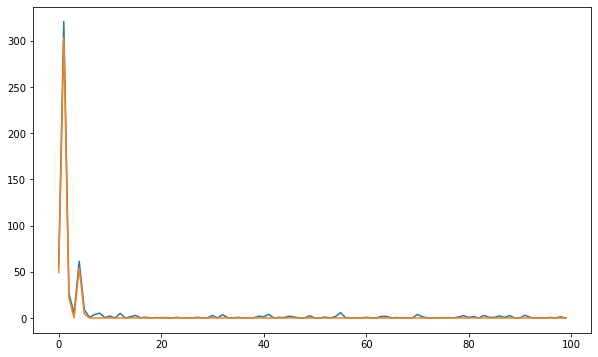

```
{'muhat': array([ 6.42453566, -18.27981487, -5.36813323, 0. ,
                 -7.57307354, 0.69758938, -2.55666216, -0. ,
                 0. , -0. , 0. , 0. ,
                 0. , -0. , -0. , -0. ,
                 0. , -0. , 0. , -0. ,
                 -0. , -0. , 0. , 0. ,
                 -0. , -0. , 0. , 0. ,
                 -0. , 0. , 0. , 0. ,
                 0. , -0. , 0. , 0. ,
                 -0. , 0. , -0. , 0. ,
                 -0. , -0. , -0. , -0. ,
                 -0. , 0. , -0. , -0. ,
                 0. , 0. , 0. , -0. ,
                 -0. , 0. , 0. , -0. ,
                 -0. , -0. , 0. , 0. ,
                 -0. , -0. , 0. , 0. ,
                 0. , 0. , 0. , -0. ,
                 -0. , 0. , 0. , -0. ,
                 -0. , 0. , 0. , 0. ,
                 0. , 0. , 0. , 0. ,
                 -0. , 0. , 0. , 0. ,
                 -0. , 0. , 0. , 0. ,
                 -0. , 0. , 0. , -0. ,
                 0. , 0. , -0. , 0. ,
                 0. , 0. , -0. , -0. ]),
 'x': array([ 7.96090601e+00, -2.17286129e+01, -6.73409067e+00, 1.16066347e+00,
             -9.29471964e+00, 2.75532680e+00, -3.58570868e+00, -7.13994439e-01,
             4.58504860e-01, -2.02885504e-01, 1.92986541e+00, 6.55340952e-02,
             5.80257166e-01, -4.08419081e-01, -2.98559300e-01, -7.77532045e-02,
             1.41066070e+00, -4.12602244e-01, 9.97319572e-01, -1.84618056e-01,
             -1.01787292e+00, -1.31628173e+00, 3.68199086e-01, 2.44761154e-01,
             -9.91785126e-01, -2.66537481e-01, 4.72120174e-01, 6.24460631e-01,
             -3.22887073e-01, 8.42601787e-01, 1.41725882e+00, 6.74331215e-01,
             1.35566126e+00, -9.77323818e-01, 1.97208316e+00, 1.56210807e-02,
             -1.52605085e+00, 1.00557005e+00, -6.75367496e-01, 9.50273574e-01,
             -6.74650304e-01, -1.23815087e-01, -2.49676097e-01, -5.59795567e-01,
             -8.98892940e-01, 7.57776686e-01, -3.49052620e-01, -9.37666511e-01,
             8.71954327e-02, 5.47814218e-01, 4.77252803e-02, -1.46828352e+00,
             -9.30857491e-01, 3.28800952e-01, 2.40925420e-01, -7.89089808e-01,
             -8.74382159e-01, -1.01778695e+00, 5.70315993e-01, 5.27018835e-01,
             -9.86879892e-01, -8.28525506e-01, 6.98180547e-01, 1.47166419e-01,
             5.29873400e-01, 1.45358366e-01, 5.76151265e-01, -3.91087511e-01,
             -1.07801307e+00, 5.96031864e-01, 8.23746723e-01, -4.31143439e-01,
             -2.52209282e-01, 1.12542209e-01, 8.29350466e-03, 1.05791488e+00,
             6.25175011e-01, 2.10597487e-01, 6.63196692e-01, 5.10036820e-01,
             -7.20199340e-01, 1.44715172e-01, 1.00295048e+00, 1.17873613e+00,
             -1.88134284e+00, 1.05213248e+00, 3.22540435e-01, 1.78509023e+00,
             -1.36083714e-01, 1.73329388e-02, 6.72531590e-01, -7.30082711e-02,
             7.00278667e-01, 7.84248744e-01, -7.87404812e-01, 2.89861256e-03,
             1.20609925e+00, 1.00302915e+00, -3.84288222e-01, -7.96168316e-01]),
 'threshold.sdevscale': 2.695897994795814,
 'threshold.origscale': 2.32141951295113,
 'prior': 'laplace',
 'w': 0.12797891964122715,
 'a': 0.5,
 'bayesfac': False,
 'sdev': 0.8610932303197002,
 'threshrule': 'median'}
```
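The printed fields are internally consistent in a few checkable ways. `threshold.origscale` equals `threshold.sdevscale * sdev` (2.6959 × 0.8611 ≈ 2.3214). Every `x` with magnitude at or below that threshold has a zero `muhat`, the seven leading entries that exceed it keep their sign and are shrunk toward zero, and the `-0.` entries in `muhat` are signed zeros that sit exactly where `x` is negative. The key names appear to match the verbose output of an EbayesThresh-style `ebayesthresh` call (Laplace prior, posterior-median rule), but that provenance is an assumption. The helper `check_threshold_output` below is hypothetical: a minimal sketch that tests those properties on any dict with these keys.

```python
import numpy as np


def check_threshold_output(res, rtol=1e-9):
    """Hypothetical helper: sanity-check a verbose thresholding result dict.

    Assumes the keys printed above: 'x', 'muhat', 'sdev',
    'threshold.sdevscale' and 'threshold.origscale'.
    """
    x = np.asarray(res["x"], dtype=float)
    muhat = np.asarray(res["muhat"], dtype=float)
    thr = res["threshold.origscale"]

    # The data-scale threshold should equal the threshold in noise-sd units times sdev.
    scale_ok = np.isclose(thr, res["threshold.sdevscale"] * res["sdev"], rtol=rtol, atol=0.0)

    # A thresholding rule zeroes every estimate with |x| <= threshold
    # (signed zeros such as -0.0 compare equal to 0.0) and keeps the rest nonzero.
    killed = np.abs(x) <= thr
    zeros_ok = np.all(muhat[killed] == 0.0)
    nonzeros_ok = np.all(muhat[~killed] != 0.0)

    # Shrinkage: each estimate keeps the sign of x and is no larger in magnitude.
    shrink_ok = np.all(np.abs(muhat) <= np.abs(x)) and np.all(muhat * x >= 0.0)

    return {
        "scale": bool(scale_ok),
        "zeros": bool(zeros_ok),
        "nonzeros": bool(nonzeros_ok),
        "shrinkage": bool(shrink_ok),
    }


# First seven entries of the printed arrays (they hold every nonzero muhat),
# plus the scalar fields the check reads.
first_seven = {
    "x": [7.96090601, -21.7286129, -6.73409067, 1.16066347,
          -9.29471964, 2.75532680, -3.58570868],
    "muhat": [6.42453566, -18.27981487, -5.36813323, 0.0,
              -7.57307354, 0.69758938, -2.55666216],
    "threshold.sdevscale": 2.695897994795814,
    "threshold.origscale": 2.32141951295113,
    "sdev": 0.8610932303197002,
}

print(check_threshold_output(first_seven))
```

On this subset it prints `{'scale': True, 'zeros': True, 'nonzeros': True, 'shrinkage': True}`. Passing the full returned dict should give the same result, since the remaining 93 entries of `x` all lie below 2.3214 in magnitude and their `muhat` values are all zero.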