[CAM]HCAM_ebayes

CAM

import

import os
# select the GPU before torch/fastai initialise CUDA
os.environ['CUDA_LAUNCH_BLOCKING'] = "1"
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
import torch
from fastai.vision.all import *
import cv2
import numpy as np
import matplotlib.pyplot as plt
from PIL import ImageDraw
from PIL import ImageFont
from PIL import ImageFile
from PIL import Image
ImageFile.LOAD_TRUNCATED_IMAGES = True
from torchvision.utils import save_image
import rpy2
import rpy2.robjects as ro
from rpy2.robjects.vectors import FloatVector
from rpy2.robjects.packages import importr

def label_func(f):
    # cat-breed file names start with an uppercase letter, dog-breed names with a lowercase one
    if f[0].isupper():
        return 'cat'
    else:
        return 'dog'

Simulation

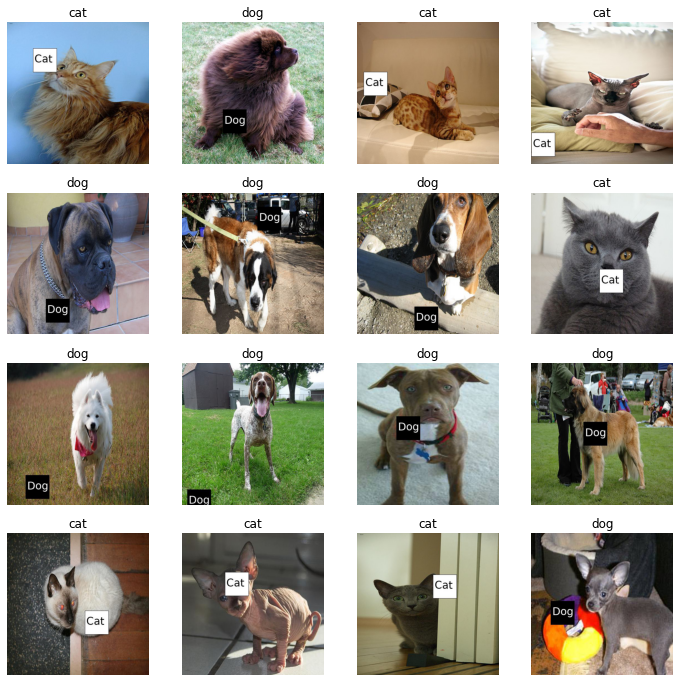

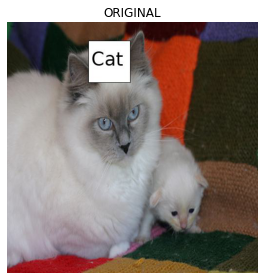

- Here we check the results of running CAM on cat/dog images to which a random box has been added.

(1) Cat and dog images with a random box inserted

path=Path('random_pet_one') # photos with a random box inserted
path.ls()
(#7391) [Path('random_pet_one/Bombay_13.jpg'),Path('random_pet_one/beagle_193.jpg'),Path('random_pet_one/Ragdoll_8.jpg'),Path('random_pet_one/boxer_106.jpg'),Path('random_pet_one/keeshond_56.jpg'),Path('random_pet_one/american_pit_bull_terrier_162.jpg'),Path('random_pet_one/saint_bernard_136.jpg'),Path('random_pet_one/staffordshire_bull_terrier_76.jpg'),Path('random_pet_one/pug_173.jpg'),Path('random_pet_one/american_pit_bull_terrier_117.jpg')...]
files=get_image_files(path)
dls=ImageDataLoaders.from_name_func(path,files,label_func,item_tfms=Resize(512))
dls.show_batch(max_n=16)
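`label_func` relies on the Oxford-IIIT Pet naming convention, in which cat-breed file names are capitalised and dog-breed names are not; fastai's `from_name_func` hands each file's name (not the full path) to the labelling function. A quick check against file names taken from the listing above:

```python
from pathlib import Path

def label_func(f):
    # Same rule as in the notebook: uppercase first letter -> cat breed
    return 'cat' if f[0].isupper() else 'dog'

# from_name_func labels by file name, so mimic that with Path(...).name
sample = [Path('random_pet_one/Bombay_13.jpg'),
          Path('random_pet_one/beagle_193.jpg'),
          Path('random_pet_one/Ragdoll_8.jpg'),
          Path('random_pet_one/pug_173.jpg')]
labels = [label_func(p.name) for p in sample]
print(labels)  # ['cat', 'dog', 'cat', 'dog']
```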

(2) Training

lrnr=vision_learner(dls,resnet34,metrics=error_rate)  # formerly cnn_learner
lrnr.fine_tune(1)


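net1=lrnr.model[0]  # ResNet34 body: outputs a 512 x 16 x 16 feature map for a 512 x 512 input
net2=lrnr.model[1]
# replace the fastai head with GAP + a bias-free linear layer, so the CAM can be read off its weights
net2 = torch.nn.Sequential(
    torch.nn.AdaptiveAvgPool2d(output_size=1),
    torch.nn.Flatten(),
    torch.nn.Linear(512,out_features=2,bias=False))
net=torch.nn.Sequential(net1,net2)
lrnr2=Learner(dls,net,metrics=accuracy)
lrnr2.fine_tune(10)
interp = ClassificationInterpretation.from_learner(lrnr2)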

interp.plot_confusion_matrix()
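The head is swapped for GAP followed by a bias-free `Linear(512, 2)` because, with that head, each class logit is exactly the spatial average of that class's CAM, so the 16 x 16 map `einsum('ij,jkl -> ikl', W, features)` splits the prediction over image positions. A minimal check with random stand-in tensors (assumed shapes 512 x 16 x 16, matching ResNet34 on a 512 x 512 input) instead of the trained `net1`/`net2`:

```python
import torch

torch.manual_seed(0)

# Stand-ins (assumed shapes): a 512-channel 16x16 feature map like net1's output,
# and a head built the same way as net2 in the notebook.
feats = torch.randn(512, 16, 16)
head = torch.nn.Sequential(
    torch.nn.AdaptiveAvgPool2d(output_size=1),
    torch.nn.Flatten(),
    torch.nn.Linear(512, 2, bias=False),
)
W = head[2].weight                                   # shape (2, 512)

logits = head(feats.unsqueeze(0)).squeeze(0)         # shape (2,)
cam = torch.einsum('ij,jkl -> ikl', W, feats)        # shape (2, 16, 16)

# Pooling and the bias-free linear map are both linear, so they commute:
# each logit equals the mean of its class's CAM over the 16x16 grid.
print(torch.allclose(logits, cam.mean(dim=(1, 2)), atol=1e-5))  # True
```

With a bias term the identity would only hold up to that constant offset, which is why `bias=False` is used.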


torch.save(lrnr2, './model/HCAM.pth')
# torch.save(camimg, './model/HCAM_caming.pth')
# torch.load(PATH)  # how to load a saved file back
torch.save(lrnr2.state_dict(), './model/HCAM_parameter.pth')

(3) CAM results

x, = first(dls.test_dl([PILImage.create(get_image_files(path)[2])]))
camimg = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x).squeeze())
x.shape
torch.Size([1, 3, 512, 512])
camimg.shape
torch.Size([2, 16, 16])
fig, (ax1,ax2) = plt.subplots(1,2)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(camimg[0].to("cpu").detach(),alpha=0.3,extent=(0,512,512,0),interpolation='bilinear',cmap='magma')

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(camimg[1].to("cpu").detach(),alpha=0.3,extent=(0,512,512,0),interpolation='bilinear',cmap='magma')

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()
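The `einsum('ij,jkl -> ikl', ...)` call contracts the 2 x 512 weight matrix with the 512 x 16 x 16 feature map, so each of the 16 x 16 cells summarises a 32 x 32 pixel patch of the 512 x 512 input. A sketch with random stand-ins showing that it is just a matrix product over flattened positions, plus a rough tensor-level counterpart (an assumption, not what the notebook does) of stretching the map with `extent=(0,512,512,0)` and `interpolation='bilinear'`:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
W = torch.randn(2, 512)            # stand-in for net2[2].weight
feats = torch.randn(512, 16, 16)   # stand-in for net1(x).squeeze()

cam = torch.einsum('ij,jkl -> ikl', W, feats)
# Same contraction as a matrix product over the 256 flattened positions
cam_mm = (W @ feats.reshape(512, -1)).reshape(2, 16, 16)
print(torch.allclose(cam, cam_mm, atol=1e-3))  # True

# Bilinear upsampling of both class maps to the input resolution
cam_up = F.interpolate(cam.unsqueeze(0), size=(512, 512), mode='bilinear', align_corners=False)
print(cam_up.shape)  # torch.Size([1, 2, 512, 512])
```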

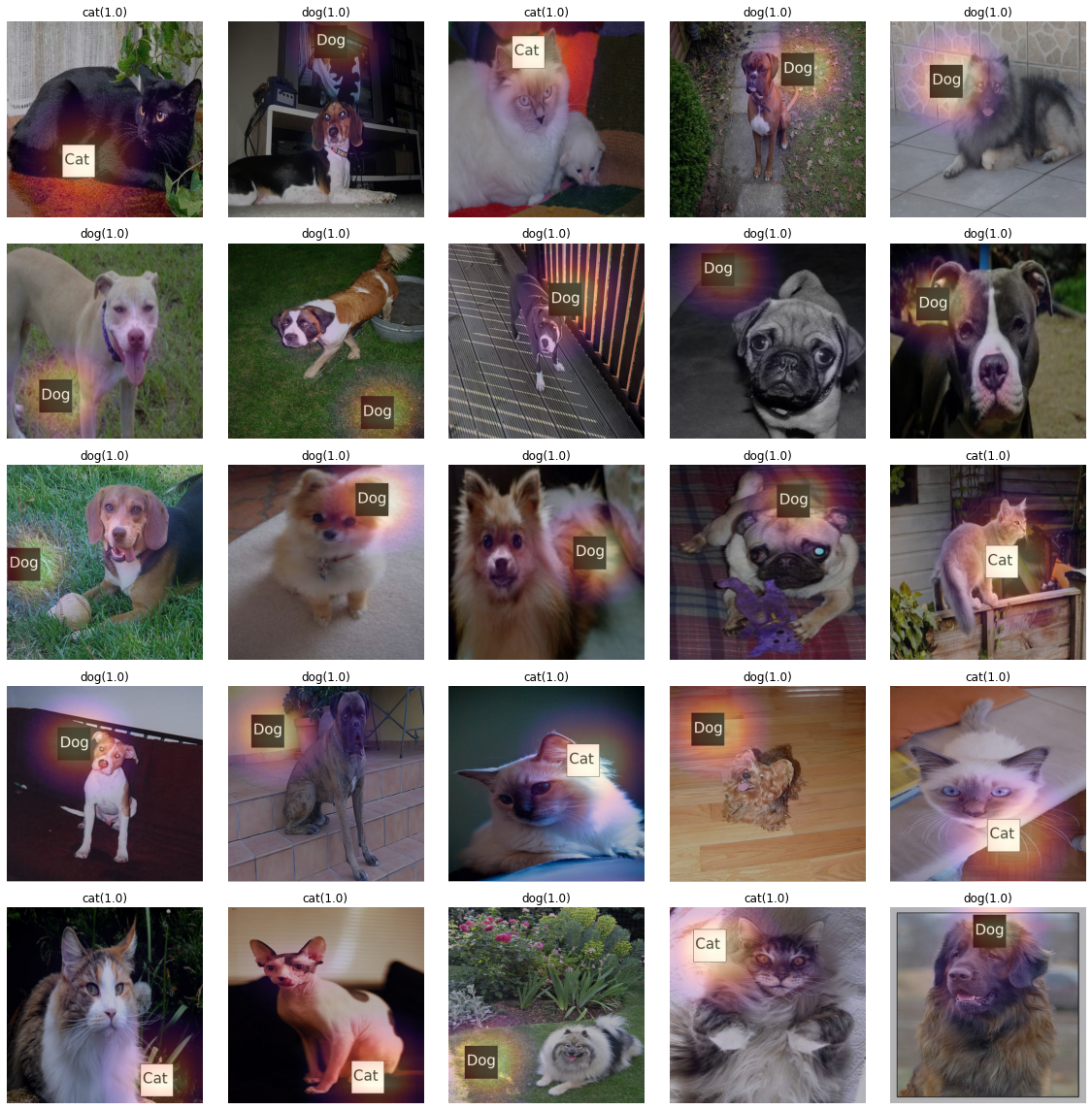

fig, ax = plt.subplots(5,5)
k=0
for i in range(5):
    for j in range(5):
        x, = first(dls.test_dl([PILImage.create(get_image_files(path)[k])]))
        camimg = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x).squeeze())
        a,b = net(x).tolist()[0]
        catprob, dogprob = np.exp(a)/ (np.exp(a)+np.exp(b)) , np.exp(b)/ (np.exp(a)+np.exp(b))
        if catprob>dogprob:
            # predicted cat: overlay the cat-channel CAM
            dls.train.decode((x,))[0].squeeze().show(ax=ax[i][j])
            ax[i][j].imshow(camimg[0].to("cpu").detach(),alpha=0.3,extent=(0,512,512,0),interpolation='bilinear',cmap='magma')
            ax[i][j].set_title("cat(%s)" % catprob.round(5))
        else:
            # predicted dog: overlay the dog-channel CAM
            dls.train.decode((x,))[0].squeeze().show(ax=ax[i][j])
            ax[i][j].imshow(camimg[1].to("cpu").detach(),alpha=0.3,extent=(0,512,512,0),interpolation='bilinear',cmap='magma')
            ax[i][j].set_title("dog(%s)" % dogprob.round(5))
        k=k+1

fig.set_figwidth(16)

fig.set_figheight(16)

fig.tight_layout()
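The probabilities in the titles come from a two-class softmax written out by hand. `np.exp` overflows float64 for arguments above about 709, after which `np.exp(a)/(np.exp(a)+np.exp(b))` becomes `inf/inf = nan`. A minimal stable variant (hypothetical helper name `two_class_probs`) subtracts the larger logit first, which leaves the ratio unchanged:

```python
import numpy as np

def two_class_probs(a, b):
    # Numerically stable softmax over two logits: shift by the max before exponentiating
    m = max(a, b)
    ea, eb = np.exp(a - m), np.exp(b - m)
    return ea / (ea + eb), eb / (ea + eb)

print(two_class_probs(2.0, 0.0))       # matches the hand-written formula for small logits
print(two_class_probs(1000.0, 990.0))  # the hand-written formula would give nan here
```

In the notebook itself, `torch.softmax(net(x), dim=1)` computes the same two probabilities in one call.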

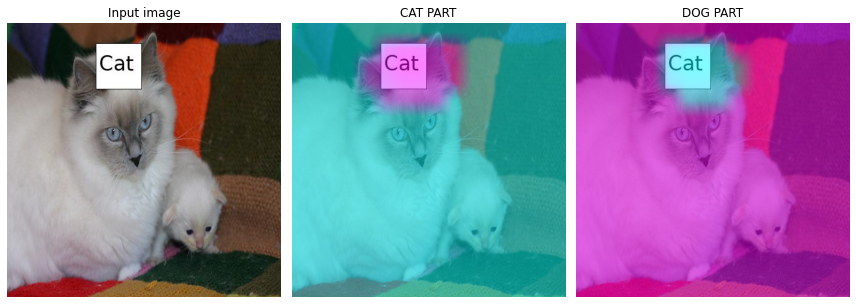

Step by step

CAT

x, = first(dls.test_dl([PILImage.create(get_image_files(path)[2])]))camimg = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x).squeeze())ebayesthresh = importr('EbayesThresh').ebayesthresh

power_threshed=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[0].detach().reshape(-1))**2)))

ybar_threshed = np.where(power_threshed>1600,torch.tensor(camimg[0].detach().reshape(-1)),0)

ybar_threshed = torch.tensor(ybar_threshed.reshape(16,16))

power_threshed2=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[1].detach().reshape(-1))**2)))

ybar_threshed2 = np.where(power_threshed2>2100,torch.tensor(camimg[1].detach().reshape(-1)),0)

ybar_threshed2 = torch.tensor(ybar_threshed2.reshape(16,16))UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

power_threshed=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[0].detach().reshape(-1))**2)))

<ipython-input-322-3ac1a13f4724>:4: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

ybar_threshed = np.where(power_threshed>1600,torch.tensor(camimg[0].detach().reshape(-1)),0)

<ipython-input-322-3ac1a13f4724>:7: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

power_threshed2=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[1].detach().reshape(-1))**2)))

<ipython-input-322-3ac1a13f4724>:8: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

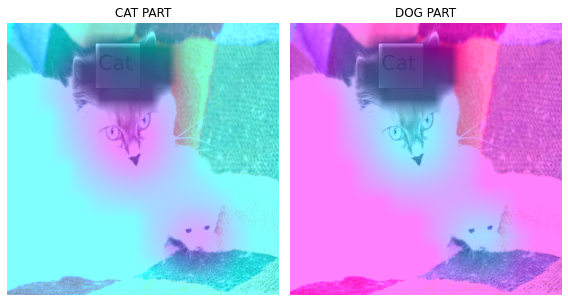

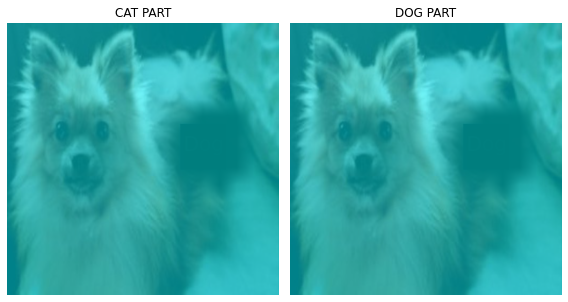

ybar_threshed2 = np.where(power_threshed2>2100,torch.tensor(camimg[1].detach().reshape(-1)),0)fig, (ax1,ax2,ax3) = plt.subplots(1,3)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("Input image")

#

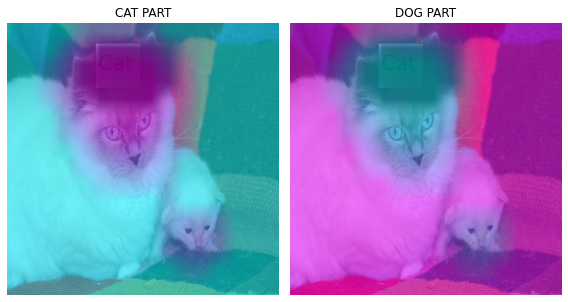

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow((ybar_threshed).to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("CAT PART")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax3)

ax3.imshow((ybar_threshed2).to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax3.set_title("DOG PART")

#

fig.set_figwidth(12)

fig.set_figheight(12)

fig.tight_layout()

- The stronger the evidence behind the decision, the more the overlay shifts from blue toward purple.
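The same threshold-and-mask pattern is repeated for every channel and every round (cutoffs 1600 and 2100 here, later 10 and 4). A sketch that factors it into one function (hypothetical name `threshold_cam`) with the shrinkage step passed in. In the notebook that step is `ebayesthresh` from the R package EbayesThresh, called through rpy2; to keep the sketch runnable without R it uses a simple median hard-threshold stand-in, which is NOT empirical Bayes thresholding:

```python
import numpy as np

def threshold_cam(cam, shrink, cutoff):
    """Keep CAM values whose shrunken squared value ("power") exceeds `cutoff`.

    cam    : 2-D array, e.g. one 16x16 class channel of camimg
    shrink : callable mapping a 1-D array of powers to shrunken powers
    cutoff : threshold applied to the shrunken power
    """
    flat = np.asarray(cam, dtype=float).reshape(-1)
    power = np.asarray(shrink(flat ** 2), dtype=float)
    return np.where(power > cutoff, flat, 0.0).reshape(np.shape(cam))

# Stand-in shrinker for a self-contained run (NOT EbayesThresh):
# zero every power at or below the median.
def hard_median(p):
    return np.where(p > np.median(p), p, 0.0)

cam = np.array([[1.0, -50.0], [45.0, 2.0]])
masked = threshold_cam(cam, hard_median, cutoff=1600)
print(masked)
```

With R available, the notebook's call would correspond to something like `threshold_cam(camimg[0].detach().cpu().numpy(), lambda p: np.array(ebayesthresh(FloatVector(p.tolist()))), 1600)`. Because the power is the square of the CAM, strongly negative CAM values survive the mask just like strongly positive ones, as the `-50.0` entry shows.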

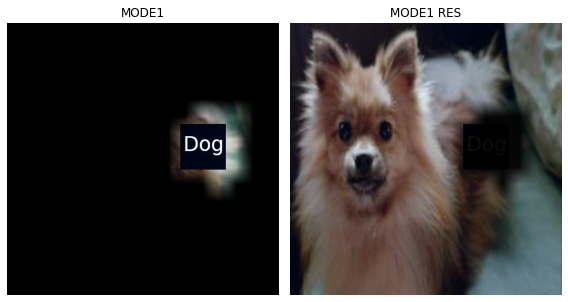

a,b = net(x).tolist()[0]
np.exp(a)/ (np.exp(a)+np.exp(b)) , np.exp(b)/ (np.exp(a)+np.exp(b))
(0.9999999986248147, 1.3751852434027162e-09)

mode 1

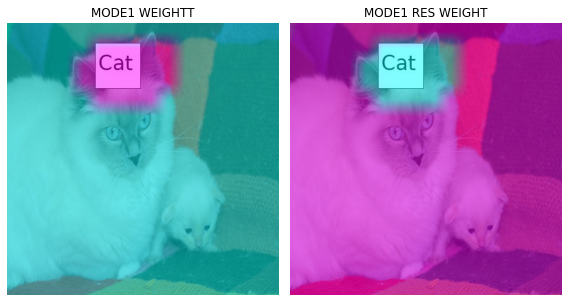

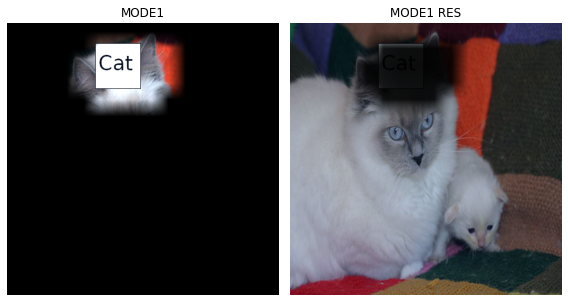

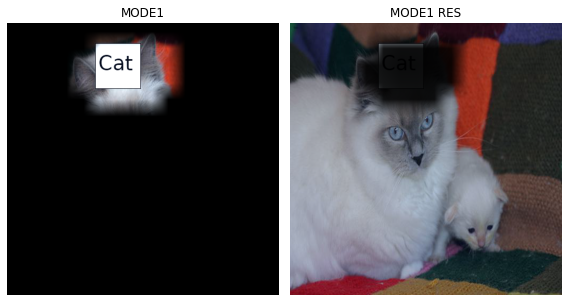

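# test=camimg_o[0]-torch.min(camimg_o[0])
A1=torch.exp(-0.05*(ybar_threshed))  # residual weight: 1 where the thresholded cat CAM is 0, decaying as the CAM grows
A2 = 1 - A1                          # mode-1 weight: the complement of A1
fig, (ax1,ax2) = plt.subplots(1,2)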

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(A2.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("MODE1 WEIGHT")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(A1.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("MODE1 RES WEIGHT")

#

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

# mode 1 res

X1=np.array(A1.to("cpu").detach(),dtype=np.float32)

Y1=torch.Tensor(cv2.resize(X1,(512,512),interpolation=cv2.INTER_LINEAR))

x1=x.squeeze().to('cpu')*Y1-torch.min(x.squeeze().to('cpu'))*Y1

# mode 1

X12=np.array(A2.to("cpu").detach(),dtype=np.float32)

Y12=torch.Tensor(cv2.resize(X12,(512,512),interpolation=cv2.INTER_LINEAR))

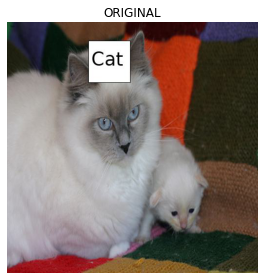

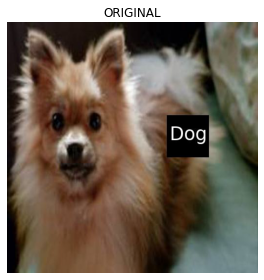

x12=x.squeeze().to('cpu')*Y12-torch.min(x.squeeze().to('cpu'))*Y12

- Separating the 1st CAM

fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

#

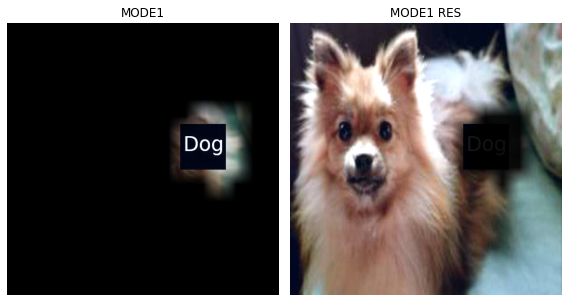

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.35).squeeze().show(ax=ax1) #MODE1

(x1*0.2).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()
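Factoring out the minimum gives `x1 = (x - min x) * Y1` and `x12 = (x - min x) * Y12`. Since `A2 = 1 - A1` and linear interpolation uses weights that sum to one, the two upsampled weight maps sum to one at every pixel, so MODE1 and MODE1 RES add back up to the shifted input. A sketch with random stand-ins, using `torch.nn.functional.interpolate` in place of `cv2.resize` (a substitution; the sum-to-one property should hold for either bilinear resize):

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(3, 512, 512)                 # stand-in for x.squeeze() (normalised image)
ybar = torch.relu(torch.randn(16, 16)) * 40  # stand-in for a thresholded 16x16 CAM

A1 = torch.exp(-0.05 * ybar)   # residual weight
A2 = 1 - A1                    # mode weight

def up(a):
    # Bilinear upsampling of a 16x16 map to 512x512
    return F.interpolate(a[None, None], size=(512, 512), mode='bilinear', align_corners=False)[0, 0]

Y1, Y12 = up(A1), up(A2)
shifted = x - x.min()                 # x*Y - min(x)*Y with Y factored out
x1, x12 = shifted * Y1, shifted * Y12 # "MODE1 RES" and "MODE1"

print(torch.allclose(x1 + x12, shifted, atol=1e-5))  # True
```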

x1 = x1.reshape(1,3,512,512)
net1.to('cpu')
net2.to('cpu')
Sequential(
  (0): AdaptiveAvgPool2d(output_size=1)
  (1): Flatten(start_dim=1, end_dim=-1)
  (2): Linear(in_features=512, out_features=2, bias=False)
)
camimg1 = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x1).squeeze())
# cat channel of the 2nd CAM, cutoff 10
cam0 = camimg1[0].detach().cpu().reshape(-1)
power_threshed3=np.array(ebayesthresh(FloatVector((cam0**2).tolist())))
ybar_threshed3 = np.where(power_threshed3>10,cam0.numpy(),0)
ybar_threshed3 = torch.tensor(ybar_threshed3.reshape(16,16))
# dog channel of the 2nd CAM, cutoff 10
cam1 = camimg1[1].detach().cpu().reshape(-1)
power_threshed4=np.array(ebayesthresh(FloatVector((cam1**2).tolist())))
ybar_threshed4 = np.where(power_threshed4>10,cam1.numpy(),0)
ybar_threshed4 = torch.tensor(ybar_threshed4.reshape(16,16))
a1,b1 = net(x1).tolist()[0]
np.exp(a1)/ (np.exp(a1)+np.exp(b1)) , np.exp(b1)/ (np.exp(a1)+np.exp(b1))
(0.9993523558198389, 0.0006476441801611291)

- mode1 res

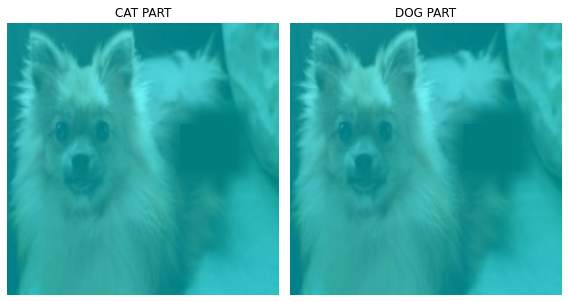

fig, (ax1,ax2) = plt.subplots(1,2)

#

(x1*0.25).squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed3,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

(x1*0.25).squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed4,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

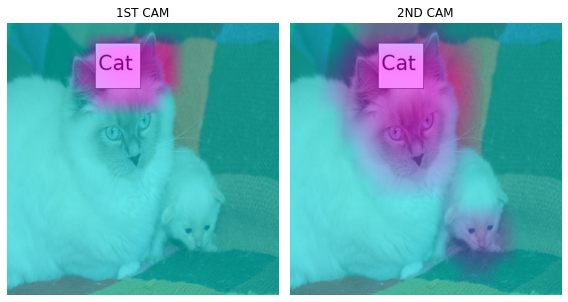

- Comparison with the first CAM result

fig, (ax1,ax2) = plt.subplots(1,2)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("1ST CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed3,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("2ND CAM")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

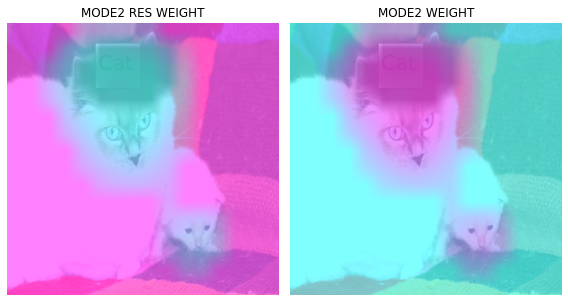

- Separating the 2nd CAM

# test1=camimg1[0]-torch.min(camimg1[0])

A3 = torch.exp(-0.05*(ybar_threshed3))

A4 = 1 - A3
fig, (ax1,ax2) = plt.subplots(1,2)

#

x1.squeeze().show(ax=ax2)

dls.train.decode((x1,))[0].squeeze().show(ax=ax1)

ax1.imshow(A3.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("MODE2 RES WEIGHT")

#

x1.squeeze().show(ax=ax2)

dls.train.decode((x1,))[0].squeeze().show(ax=ax2)

ax2.imshow(A4.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("MODE2 WEIGHT")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

X2=np.array(A3.to("cpu").detach(),dtype=np.float32)

Y2=torch.Tensor(cv2.resize(X2,(512,512),interpolation=cv2.INTER_LINEAR))

x2=(x1)*Y2-torch.min((x1)*Y2)

X22=np.array(A4.to("cpu").detach(),dtype=np.float32)

Y22=torch.Tensor(cv2.resize(X22,(512,512),interpolation=cv2.INTER_LINEAR))

x22=(x1)*Y22-torch.min((x1)*Y22)
fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

#

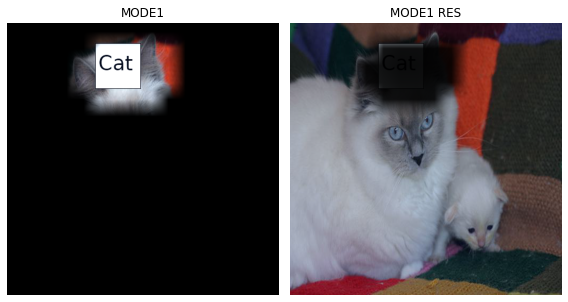

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

(x1*0.2).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#

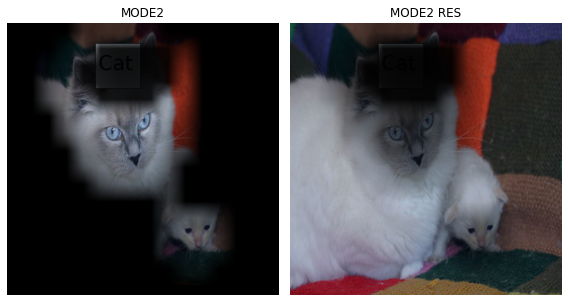

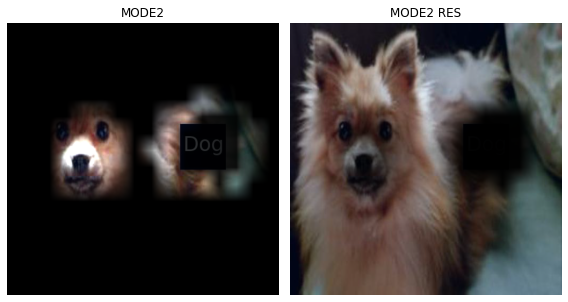

fig, (ax1, ax2) = plt.subplots(1,2)

(x22*0.5).squeeze().show(ax=ax1) #MODE2

(x2*0.2).squeeze().show(ax=ax2) #MODE2_res

ax1.set_title("MODE2")

ax2.set_title("MODE2 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

x2 = x2.reshape(1,3,512,512)
net1.to('cpu')
net2.to('cpu')
Sequential(
  (0): AdaptiveAvgPool2d(output_size=1)
  (1): Flatten(start_dim=1, end_dim=-1)
  (2): Linear(in_features=512, out_features=2, bias=False)
)
camimg2 = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x2).squeeze())
# cat channel of the 3rd CAM, cutoff 4
cam0 = camimg2[0].detach().cpu().reshape(-1)
power_threshed5=np.array(ebayesthresh(FloatVector((cam0**2).tolist())))
ybar_threshed5 = np.where(power_threshed5>4,cam0.numpy(),0)
ybar_threshed5 = torch.tensor(ybar_threshed5.reshape(16,16))
# dog channel of the 3rd CAM, cutoff 4
cam1 = camimg2[1].detach().cpu().reshape(-1)
power_threshed6=np.array(ebayesthresh(FloatVector((cam1**2).tolist())))
ybar_threshed6 = np.where(power_threshed6>4,cam1.numpy(),0)
ybar_threshed6 = torch.tensor(ybar_threshed6.reshape(16,16))
a2,b2 = net(x2).tolist()[0]
np.exp(a2)/(np.exp(a2)+np.exp(b2)), np.exp(b2)/(np.exp(a2)+np.exp(b2))
(0.9923479929133789, 0.007652007086621125)

- Overlaying the CAM results on mode2 res

fig, (ax1, ax2) = plt.subplots(1,2)

#

x2.squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed5,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

x2.squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed6,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

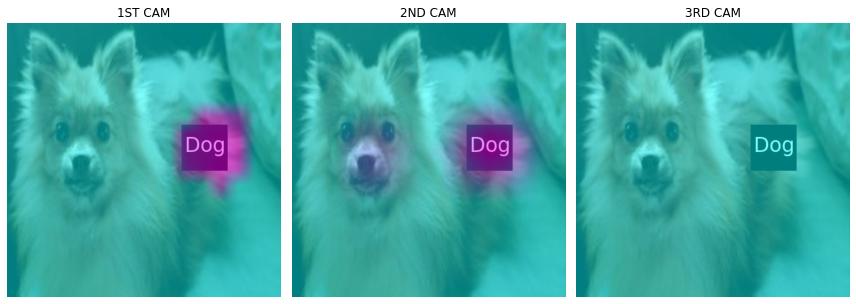

fig, (ax1,ax2,ax3) = plt.subplots(1,3)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("1ST CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed3,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("2ND CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax3)

ax3.imshow(ybar_threshed5,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax3.set_title("3RD CAM")

fig.set_figwidth(12)

fig.set_figheight(12)

fig.tight_layout()

Building mode 3

# test2=camimg2[0]-torch.min(camimg2[0])
A5 = torch.exp(-0.05*(ybar_threshed5))
A6 = 1 - A5
fig, (ax1, ax2) = plt.subplots(1,2)

#

x2.squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed5,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

x2.squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed6,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#mode 3 res

X3=np.array(A5.to("cpu").detach(),dtype=np.float32)

Y3=torch.Tensor(cv2.resize(X3,(512,512),interpolation=cv2.INTER_LINEAR))

x3=x2*Y3-torch.min(x2*Y3)

# mode 3

X32=np.array(A6.to("cpu").detach(),dtype=np.float32)

Y32=torch.Tensor(cv2.resize(X32,(512,512),interpolation=cv2.INTER_LINEAR))

x32=x2*Y32-torch.min(x2*Y32)
fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

(x1*0.2).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x22*0.5).squeeze().show(ax=ax1) #MODE2

(x2*0.2).squeeze().show(ax=ax2) #MODE2_res

ax1.set_title("MODE2")

ax2.set_title("MODE2 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

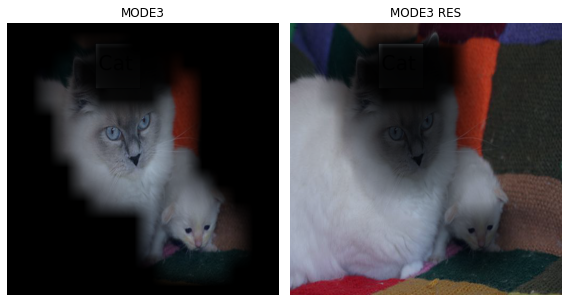

#

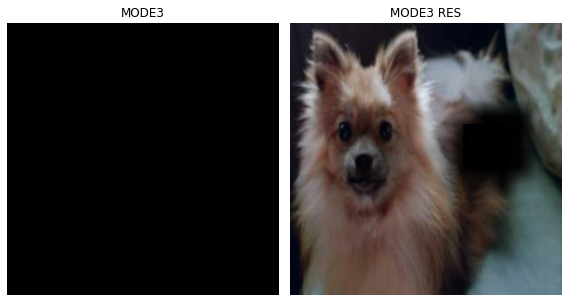

fig, (ax1, ax2) = plt.subplots(1,2)

(x32*0.8).squeeze().show(ax=ax1) #MODE3

(x3*0.2).squeeze().show(ax=ax2) #MODE3_res

ax1.set_title("MODE3")

ax2.set_title("MODE3 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

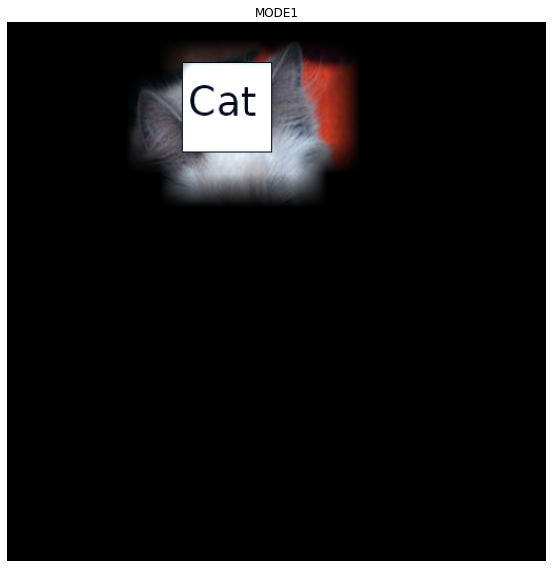

fig, (ax1) = plt.subplots(1,1)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

ax1.set_title("MODE1")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

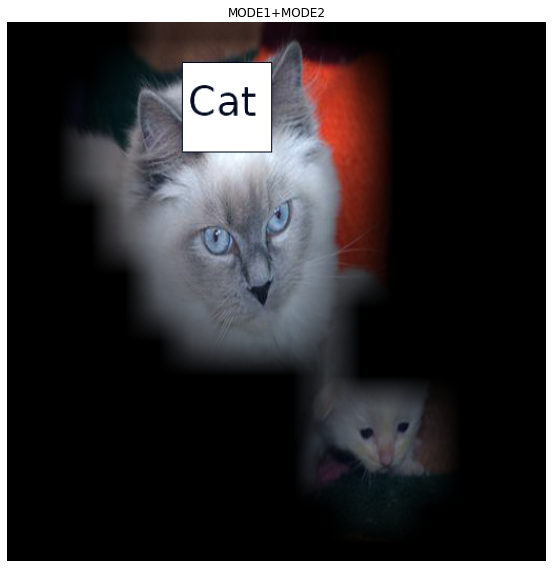

fig, (ax1) = plt.subplots(1,1)

(x12*0.3 + x22*0.5).squeeze().show(ax=ax1) #MODE1+MODE2

ax1.set_title("MODE1+MODE2")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

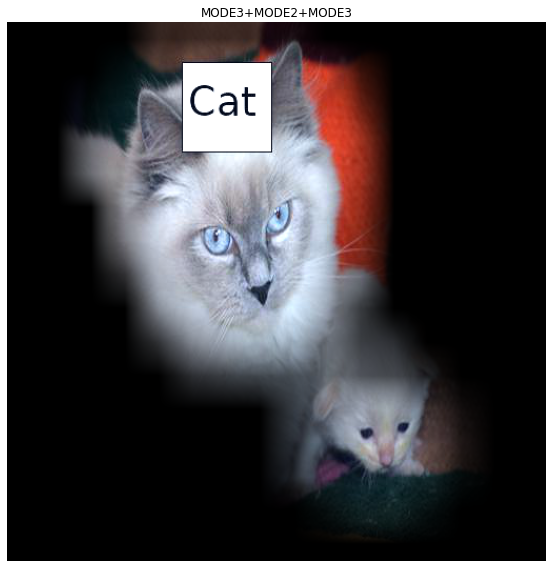

fig, (ax1) = plt.subplots(1,1)

(x12*0.3 + x22*0.5 + x32*0.5).squeeze().show(ax=ax1) #MODE1+MODE2+MODE3

ax1.set_title("MODE1+MODE2+MODE3")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

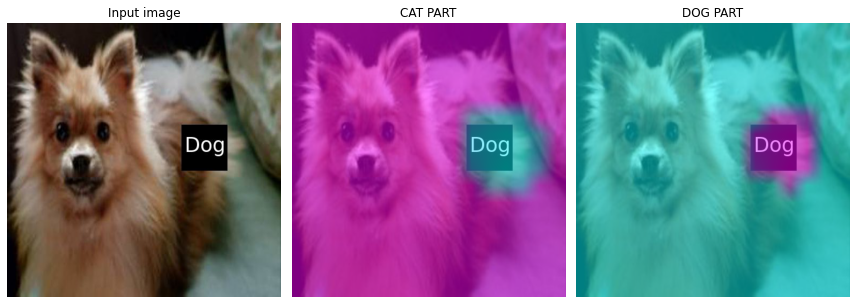

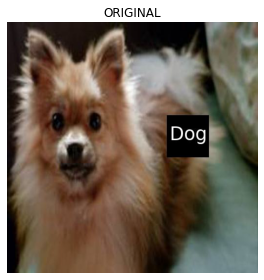

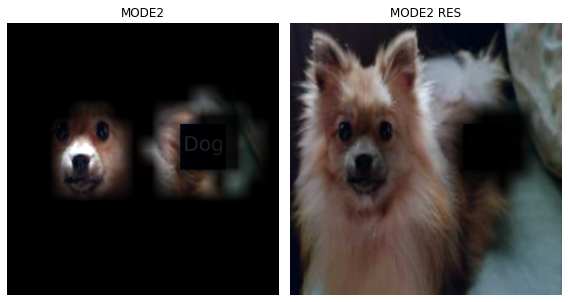
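The three rounds above repeat one recipe by hand: compute a thresholded CAM on the current residual, turn it into a residual weight `exp(-0.05 * CAM)`, and split the image into a mode (weight `1 - w`) and a new residual (weight `w`). A sketch of that recipe as a loop (hypothetical helper `hcam_decompose`). Assumptions: the CAM function is passed in (in the notebook it is the einsum over `net1`/`net2` followed by EbayesThresh, with hand-picked cutoffs per round), the minimum is subtracted before weighting in every round (the notebook does this in round 1 but subtracts it after weighting in rounds 2 and 3), and the test uses a brightness-based stand-in rather than the trained network:

```python
import torch
import torch.nn.functional as F

def hcam_decompose(x, cam_fn, n_modes=3, scale=0.05):
    """Hypothetical helper: split image x into `n_modes` CAM-weighted modes plus a residual.

    x      : (3, H, W) tensor
    cam_fn : callable mapping a (3, H, W) image to a thresholded (h, w) CAM
    """
    size = tuple(x.shape[-2:])

    def up(a):
        # bilinear upsampling of an (h, w) weight map to the image size
        return F.interpolate(a[None, None], size=size, mode='bilinear', align_corners=False)[0, 0]

    modes, res = [], x
    for _ in range(n_modes):
        w_res = torch.exp(-scale * cam_fn(res))   # like A1 / A3 / A5
        shifted = res - res.min()                  # make the image non-negative
        modes.append(shifted * up(1 - w_res))      # like x12 / x22 / x32 ("MODE k")
        res = shifted * up(w_res)                  # like x1 / x2 / x3 ("MODE k RES")
    return modes, res

# Stand-in CAM (NOT the trained network): 16x16 average brightness, scaled up
def stand_in_cam(img):
    return F.adaptive_avg_pool2d(img.mean(0, keepdim=True)[None], 16)[0, 0] * 40

torch.manual_seed(0)
x = torch.rand(3, 64, 64)
modes, res = hcam_decompose(x, stand_in_cam)
print(len(modes), res.shape)  # 3 torch.Size([3, 64, 64])
```

The combined views such as `x12*0.3 + x22*0.5 + x32*0.5` then apply per-mode display gains chosen by eye; the sketch leaves that choice to the caller.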

DOG

x, = first(dls.test_dl([PILImage.create(get_image_files(path)[12])]))camimg = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x).squeeze())ebayesthresh = importr('EbayesThresh').ebayesthresh

power_threshed=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[0].detach().reshape(-1))**2)))

ybar_threshed = np.where(power_threshed>1600,torch.tensor(camimg[0].detach().reshape(-1)),0)

ybar_threshed = torch.tensor(ybar_threshed.reshape(16,16))

power_threshed2=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[1].detach().reshape(-1))**2)))

ybar_threshed2 = np.where(power_threshed2>2100,torch.tensor(camimg[1].detach().reshape(-1)),0)

ybar_threshed2 = torch.tensor(ybar_threshed2.reshape(16,16))UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

power_threshed=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[0].detach().reshape(-1))**2)))

<ipython-input-280-3ac1a13f4724>:4: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

ybar_threshed = np.where(power_threshed>1600,torch.tensor(camimg[0].detach().reshape(-1)),0)

<ipython-input-280-3ac1a13f4724>:7: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

power_threshed2=np.array(ebayesthresh(FloatVector(torch.tensor(camimg[1].detach().reshape(-1))**2)))

<ipython-input-280-3ac1a13f4724>:8: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

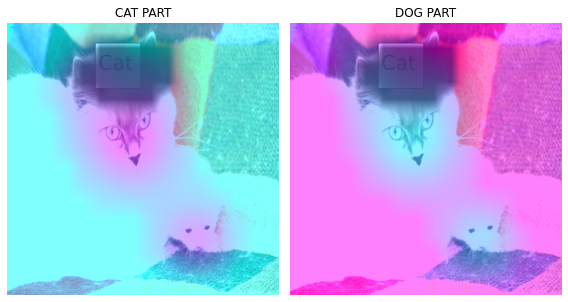

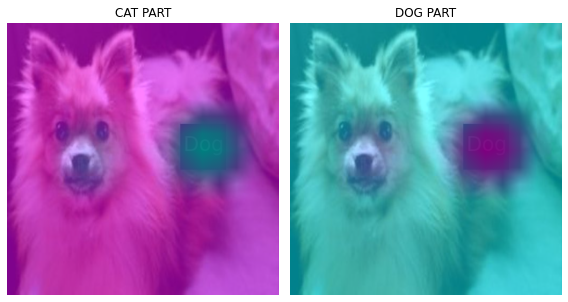

ybar_threshed2 = np.where(power_threshed2>2100,torch.tensor(camimg[1].detach().reshape(-1)),0)fig, (ax1,ax2,ax3) = plt.subplots(1,3)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("Input image")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow((ybar_threshed).to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("CAT PART")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax3)

ax3.imshow((ybar_threshed2).to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax3.set_title("DOG PART")

#

fig.set_figwidth(12)

fig.set_figheight(12)

fig.tight_layout()

- The stronger the evidence behind the decision, the more the overlay shifts from blue toward purple.
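The colours come from matplotlib's `cool` colormap, which runs from cyan at the low end to magenta at the high end. Because `imshow` is called without `vmin`/`vmax`, each overlay is scaled to its own minimum and maximum, so the colours are relative within a panel rather than comparable across panels. A quick check of the two endpoints:

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; only a colour lookup is needed
import matplotlib.pyplot as plt

cool = plt.get_cmap("cool")
low = [round(float(c), 3) for c in cool(0.0)]
high = [round(float(c), 3) for c in cool(1.0)]
print(low)   # [0.0, 1.0, 1.0, 1.0]  cyan: the smallest value in the panel
print(high)  # [1.0, 0.0, 1.0, 1.0]  magenta: the largest value in the panel
```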

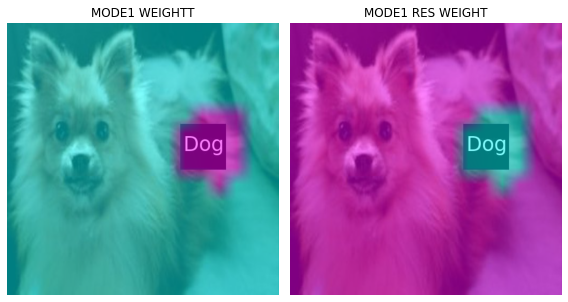

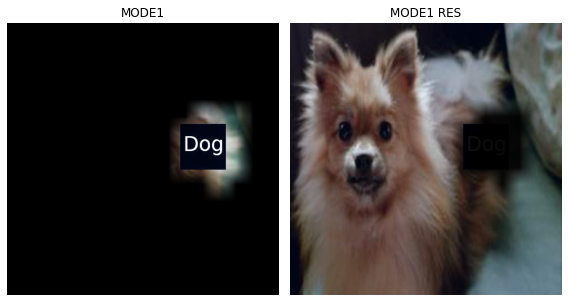

a,b = net(x).tolist()[0]
np.exp(a)/ (np.exp(a)+np.exp(b)) , np.exp(b)/ (np.exp(a)+np.exp(b))
(2.3695016723215803e-09, 0.9999999976304983)

mode 1

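# test=camimg_o[0]-torch.min(camimg_o[0])
A1=torch.exp(-0.05*(ybar_threshed2))  # residual weight: 1 where the thresholded dog CAM is 0, decaying as the CAM grows
A2 = 1 - A1                           # mode-1 weight: the complement of A1
fig, (ax1,ax2) = plt.subplots(1,2)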

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(A2.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("MODE1 WEIGHT")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(A1.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("MODE1 RES WEIGHT")

#

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

# mode 1 res

X1=np.array(A1.to("cpu").detach(),dtype=np.float32)

Y1=torch.Tensor(cv2.resize(X1,(512,512),interpolation=cv2.INTER_LINEAR))

x1=x.squeeze().to('cpu')*Y1-torch.min(x.squeeze().to('cpu'))*Y1

# mode 1

X12=np.array(A2.to("cpu").detach(),dtype=np.float32)

Y12=torch.Tensor(cv2.resize(X12,(512,512),interpolation=cv2.INTER_LINEAR))

x12=x.squeeze().to('cpu')*Y12-torch.min(x.squeeze().to('cpu'))*Y12

- Separating the 1st CAM

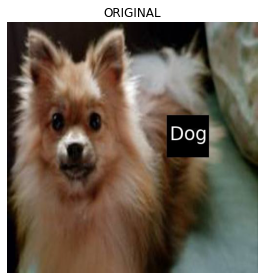

fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

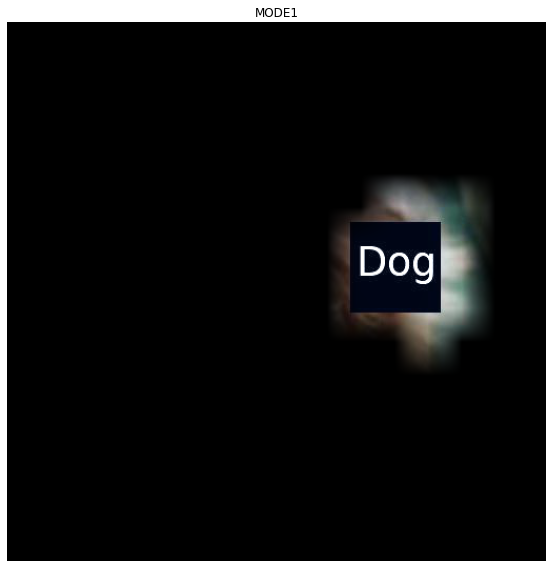

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

(x1*0.3).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

x1 = x1.reshape(1,3,512,512)
net1.to('cpu')
net2.to('cpu')
Sequential(
  (0): AdaptiveAvgPool2d(output_size=1)
  (1): Flatten(start_dim=1, end_dim=-1)
  (2): Linear(in_features=512, out_features=2, bias=False)
)
camimg1 = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x1).squeeze())
# cat channel of the 2nd CAM, cutoff 10
cam0 = camimg1[0].detach().cpu().reshape(-1)
power_threshed3=np.array(ebayesthresh(FloatVector((cam0**2).tolist())))
ybar_threshed3 = np.where(power_threshed3>10,cam0.numpy(),0)
ybar_threshed3 = torch.tensor(ybar_threshed3.reshape(16,16))
# dog channel of the 2nd CAM, cutoff 10
cam1 = camimg1[1].detach().cpu().reshape(-1)
power_threshed4=np.array(ebayesthresh(FloatVector((cam1**2).tolist())))
ybar_threshed4 = np.where(power_threshed4>10,cam1.numpy(),0)
ybar_threshed4 = torch.tensor(ybar_threshed4.reshape(16,16))
a1,b1 = net(x1).tolist()[0]
np.exp(a1)/ (np.exp(a1)+np.exp(b1)) , np.exp(b1)/ (np.exp(a1)+np.exp(b1))
(0.005690363539499261, 0.9943096364605006)

- mode1 res

fig, (ax1,ax2) = plt.subplots(1,2)

#

(x1*0.25).squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed3,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

(x1*0.25).squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed4,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

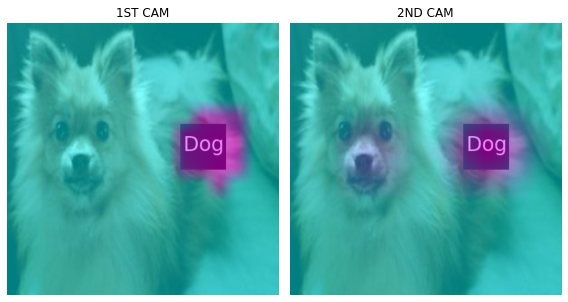

- Comparison with the first CAM result

fig, (ax1,ax2) = plt.subplots(1,2)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed2,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("1ST CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed4,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("2ND CAM")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

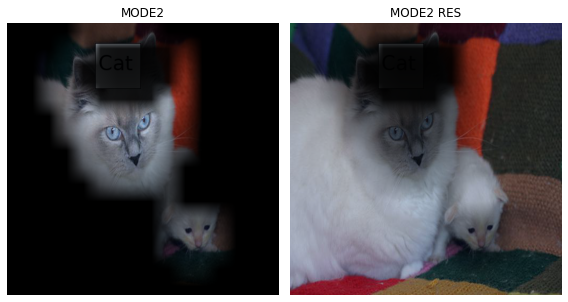

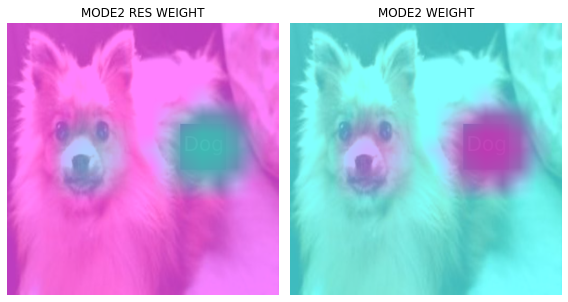

- Separating the 2nd CAM

# test1=camimg1[1]-torch.min(camimg1[1])

A3 = torch.exp(-0.05*(ybar_threshed4))

A4 = 1 - A3
fig, (ax1,ax2) = plt.subplots(1,2)

#

x1.squeeze().show(ax=ax2)

dls.train.decode((x1,))[0].squeeze().show(ax=ax1)

ax1.imshow(A3.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("MODE2 RES WEIGHT")

#

x1.squeeze().show(ax=ax2)

dls.train.decode((x1,))[0].squeeze().show(ax=ax2)

ax2.imshow(A4.data.to("cpu").detach(),alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("MODE2 WEIGHT")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

# res

X2=np.array(A3.to("cpu").detach(),dtype=np.float32)

Y2=torch.Tensor(cv2.resize(X2,(512,512),interpolation=cv2.INTER_LINEAR))

x2=(x1*0.2)*Y2-torch.min((x1*0.2)*Y2)

#

X22=np.array(A4.to("cpu").detach(),dtype=np.float32)

Y22=torch.Tensor(cv2.resize(X22,(512,512),interpolation=cv2.INTER_LINEAR))

x22=(x1*0.2)*Y22-torch.min((x1*0.2)*Y22)
fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

(x1*0.2).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x22*3).squeeze().show(ax=ax1) #MODE2

(x2).squeeze().show(ax=ax2) #MODE2_res

ax1.set_title("MODE2")

ax2.set_title("MODE2 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

x2 = x2.reshape(1,3,512,512)  # add a batch dimension for net1

net1.to('cpu')

net2.to('cpu')

Sequential(

(0): AdaptiveAvgPool2d(output_size=1)

(1): Flatten(start_dim=1, end_dim=-1)

(2): Linear(in_features=512, out_features=2, bias=False)

)

# class activation maps: (2, 512) classifier weights x (512, 16, 16) features -> (2, 16, 16)

camimg2 = torch.einsum('ij,jkl -> ikl', net2[2].weight, net1(x2).squeeze())

# CAT PART: apply ebayesthresh to the squared CAM and keep CAM values whose thresholded power exceeds 4

power_threshed5=np.array(ebayesthresh(FloatVector((camimg2[0].detach().reshape(-1)**2).tolist())))

ybar_threshed5 = np.where(power_threshed5>4,camimg2[0].detach().reshape(-1).numpy(),0)

ybar_threshed5 = torch.tensor(ybar_threshed5.reshape(16,16))

# DOG PART: same procedure on camimg2[1]

power_threshed6=np.array(ebayesthresh(FloatVector((camimg2[1].detach().reshape(-1)**2).tolist())))

ybar_threshed6 = np.where(power_threshed6>4,camimg2[1].detach().reshape(-1).numpy(),0)

ybar_threshed6 = torch.tensor(ybar_threshed6.reshape(16,16))
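The einsum `'ij,jkl -> ikl'` contracts the (2, 512) weight matrix of `net2[2]` with the (512, 16, 16) feature map from `net1`, giving one 16×16 CAM per class. Since the `net2` printout shows average pooling, flattening, and a bias-free linear layer, the spatial mean of each CAM should equal that class's logit, assuming `net` (used below) simply chains `net1` and `net2`, which is not shown in this section. The NumPy sketch below checks this with random stand-in weights and features; only the shapes come from the notebook.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((2, 512))        # stand-in for net2[2].weight (classes x channels)
F = rng.standard_normal((512, 16, 16))   # stand-in for net1(x).squeeze() (channels x H x W)

# CAM: per-class weighted sum of the feature maps, shape (2, 16, 16)
cam = np.einsum('ij,jkl->ikl', W, F)

# net2 path: AdaptiveAvgPool2d(1) -> Flatten -> Linear(512, 2, bias=False)
logits = W @ F.mean(axis=(1, 2))

# with no bias term, each logit equals the spatial mean of its CAM
cam_means = cam.mean(axis=(1, 2))

# two-class softmax, same formula as np.exp(a2)/(np.exp(a2)+np.exp(b2));
# subtracting the max first only improves numerical stability
probs = np.exp(logits - logits.max())
probs /= probs.sum()
print(logits, cam_means, probs)
```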

a2,b2 = net(x2).tolist()[0]

np.exp(a2)/(np.exp(a2)+np.exp(b2)), np.exp(b2)/(np.exp(a2)+np.exp(b2))  # softmax probabilities (cat, dog)

(0.7995345644391025, 0.2004654355608974)

- Overlay the CAM results on MODE2 RES

fig, (ax1, ax2) = plt.subplots(1,2)

#

x2.squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed5,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

x2.squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed6,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()
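The CAT PART and DOG PART overlays show `ybar_threshed5` and `ybar_threshed6`: the 256 CAM values are squared, passed to `ebayesthresh` (presumably from the R package EbayesThresh, loaded through rpy2's `importr`), and only positions whose thresholded power exceeds 4 keep their original CAM value. The empirical-Bayes step itself is not reproduced here. In this sketch a plain hard threshold, `hard_threshold` (a hypothetical stand-in, not the EbayesThresh method), replaces it so that only the masking logic around it is illustrated.

```python
import numpy as np

def hard_threshold(power, t):
    """Hypothetical stand-in for ebayesthresh: zero out values at or below t."""
    return np.where(power > t, power, 0.0)

def threshold_cam(cam_2d, t=4.0, cutoff=4.0):
    """Keep CAM values only where the thresholded squared value exceeds `cutoff`."""
    flat = cam_2d.reshape(-1)
    power = hard_threshold(flat ** 2, t)        # plays the role of ebayesthresh(FloatVector(flat**2))
    kept = np.where(power > cutoff, flat, 0.0)  # same masking rule as the notebook (power > 4)
    return kept.reshape(cam_2d.shape)

rng = np.random.default_rng(1)
cam = rng.normal(0.0, 2.0, size=(16, 16))  # toy 16x16 CAM
masked = threshold_cam(cam)
print((masked != 0).mean())                # fraction of positions that survive
```

With this stand-in, a position survives exactly when |CAM| > 2, and it keeps its sign; the real ebayesthresh adapts its threshold to the data, so the surviving set will differ.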

fig, (ax1,ax2,ax3) = plt.subplots(1,3)

#

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed2,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("1ST CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed4,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("2ND CAM")

#

dls.train.decode((x,))[0].squeeze().show(ax=ax3)

ax3.imshow(ybar_threshed6,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax3.set_title("3RD CAM")

fig.set_figwidth(12)

fig.set_figheight(12)

fig.tight_layout()

Building MODE3 (it seems the image does not separate any further)

# test2=camimg2[1]-torch.min(camimg2[1])

A5 = torch.exp(-0.05*(ybar_threshed6))  # MODE3 RES weight

A6 = 1 - A5  # MODE3 weight (complement of A5)

fig, (ax1, ax2) = plt.subplots(1,2)

#

x2.squeeze().show(ax=ax1)

ax1.imshow(ybar_threshed5,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax1.set_title("CAT PART")

#

x2.squeeze().show(ax=ax2)

ax2.imshow(ybar_threshed6,alpha=0.5,extent=(0,511,511,0),interpolation='bilinear',cmap='cool')

ax2.set_title("DOG PART")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

# MODE3 RES: weight MODE2 RES (x2) by A5, upsampled from 16x16 to 512x512

X3=np.array(A5.to("cpu").detach(),dtype=np.float32)

Y3=torch.Tensor(cv2.resize(X3,(512,512),interpolation=cv2.INTER_LINEAR))

x3=x2*Y3-torch.min(x2*Y3)  # shift so the minimum is 0

# MODE3: weight x2 by A6

X32=np.array(A6.to("cpu").detach(),dtype=np.float32)

Y32=torch.Tensor(cv2.resize(X32,(512,512),interpolation=cv2.INTER_LINEAR))

x32=x2*Y32-torch.min(x2*Y32)  # shift so the minimum is 0

fig, (ax1) = plt.subplots(1,1)

dls.train.decode((x,))[0].squeeze().show(ax=ax1)

ax1.set_title("ORIGINAL")

fig.set_figwidth(4)

fig.set_figheight(4)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

(x1*0.2).squeeze().show(ax=ax2) #MODE1_res

ax1.set_title("MODE1")

ax2.set_title("MODE1 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x22*4).squeeze().show(ax=ax1) #MODE2

(x2).squeeze().show(ax=ax2) #MODE2_res

ax1.set_title("MODE2")

ax2.set_title("MODE2 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

#

fig, (ax1, ax2) = plt.subplots(1,2)

(x32*8).squeeze().show(ax=ax1) #MODE3

(x3).squeeze().show(ax=ax2) #MODE3_res

ax1.set_title("MODE3")

ax2.set_title("MODE3 RES")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()
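MODE3 uses a complementary pair of weights, `A5 = torch.exp(-0.05*(ybar_threshed6))` and `A6 = 1 - A5`. Because `A5 + A6 = 1` and linear interpolation preserves sums, the upsampled maps `Y3` and `Y32` also sum to 1, so before the min-shifts `x2*Y3 + x2*Y32` gives back `x2`. Where the thresholded DOG CAM is 0, `A5 = 1` and the pixel stays entirely in MODE3 RES. A small NumPy check of that identity, using a toy thresholded map and nearest-neighbour upsampling via `np.kron` (a simplification; the notebook uses `cv2.INTER_LINEAR`, which is also linear):

```python
import numpy as np

rng = np.random.default_rng(2)
# toy thresholded CAM: mostly zeros, a few large positive or negative values
ybar = np.where(rng.random((16, 16)) > 0.7, rng.normal(0, 20, (16, 16)), 0.0)

A5 = np.exp(-0.05 * ybar)  # residual weight: < 1 where the CAM is positive, > 1 where negative
A6 = 1 - A5                # mode weight: the complement of A5

# nearest-neighbour upsampling 16x16 -> 512x512 (each cell becomes a 32x32 block)
Y3 = np.kron(A5, np.ones((32, 32)))
Y32 = np.kron(A6, np.ones((32, 32)))

img = rng.random((3, 512, 512))  # toy (C, H, W) image
res = img * Y3                   # MODE3 RES before shifting
mode = img * Y32                 # MODE3 before shifting
print(np.abs(res + mode - img).max())
```

The min-shifts applied afterwards (`- torch.min(...)`) add a constant to each piece, so the displayed images no longer sum exactly to `x2`.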

fig, (ax1) = plt.subplots(1,1)

(x12*0.3).squeeze().show(ax=ax1) #MODE1

ax1.set_title("MODE1")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

fig, (ax1) = plt.subplots(1,1)

(x12*0.3 + x22*4).squeeze().show(ax=ax1) #MODE1+MODE2

ax1.set_title("MODE1+MODE2")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()

fig, (ax1) = plt.subplots(1,1)

(x12*0.3 + x22*4 + x32*2).squeeze().show(ax=ax1) #MODE1+MODE2+MODE3

ax1.set_title("MODE1+MODE2+MODE3")

fig.set_figwidth(8)

fig.set_figheight(8)

fig.tight_layout()
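The display multipliers used above (0.3, 4, 8, 2) are hand-tuned, and any pixel that ends up outside [0, 1] is clipped by `imshow`. One optional alternative, sketched here with a hypothetical helper `to_unit_range`, is to min-max rescale each mode (and their sum) before plotting, so every image uses the full [0, 1] range without clipping. This changes the relative brightness of the modes, so it is a display choice rather than a correction.

```python
import numpy as np

def to_unit_range(img, eps=1e-12):
    """Min-max rescale an array to [0, 1] for display (hypothetical helper)."""
    lo, hi = img.min(), img.max()
    return (img - lo) / max(hi - lo, eps)  # eps guards against a constant image

rng = np.random.default_rng(3)
# toy modes with very different intensity scales
modes = [rng.random((3, 64, 64)) * s for s in (0.5, 3.0, 10.0)]
shown = [to_unit_range(m) for m in modes]
combined = to_unit_range(sum(shown))  # the sum can exceed 1, so rescale it too
print([(float(s.min()), float(s.max())) for s in shown])
```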