{'W': array([[0., 0., 0., ..., 0., 0., 0.],
[0., 0., 0., ..., 0., 0., 0.],
[0., 0., 0., ..., 0., 0., 0.],
...,
[0., 0., 0., ..., 0., 0., 0.],
[0., 0., 0., ..., 0., 0., 0.],
[0., 0., 0., ..., 0., 0., 0.]]),
'x': array([ 0.26815193, -0.58456893, -0.02730755, ..., 0.15397547,
-0.45056488, -0.29405249]),
'y': array([ 0.39314334, 0.63468595, 0.33280949, ..., 0.80205526,
0.6207154 , -0.40187451]),
'z': array([-0.13834514, -0.22438843, 0.08658215, ..., 0.33698514,
0.58353051, -0.08647485]),
'fnoise': array([-1.63569131, 0.49423926, -1.04026277, ..., -1.0694093 ,
-0.24395499, 0.41729667]),
'f': array([-1.54422488, -0.03596483, -0.93972715, ..., -0.01924028,
-0.02470869, -0.26266752]),
'noise': array([-0.09146643, 0.53020409, -0.10053563, ..., -1.05016902,
-0.2192463 , 0.67996419]),
'unif': array([0., 0., 0., ..., 0., 0., 0.]),

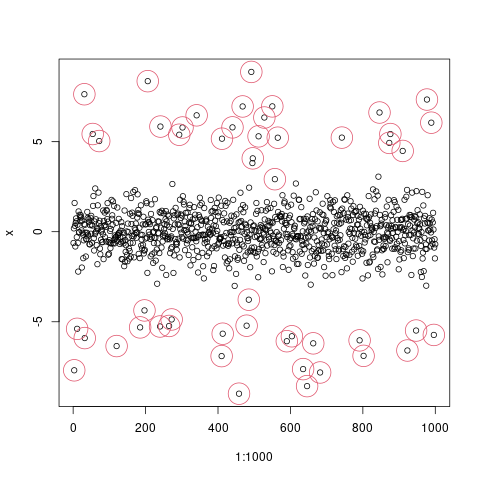

'index_of_trueoutlier2': (array([ 15, 33, 34, 36, 45, 52, 61, 153, 227, 228, 235,
240, 249, 267, 270, 273, 291, 313, 333, 353, 375, 389,
397, 402, 439, 440, 447, 449, 456, 457, 472, 509, 564,
569, 589, 638, 700, 713, 714, 732, 749, 814, 836, 851,
858, 888, 910, 927, 934, 948, 953, 972, 986, 1002, 1041,
1073, 1087, 1090, 1139, 1182, 1227, 1270, 1276, 1344, 1347, 1459,
1461, 1467, 1499, 1500, 1512, 1515, 1544, 1562, 1610, 1637, 1640,
1649, 1665, 1695, 1699, 1737, 1740, 1783, 1788, 1808, 1857, 1868,
1882, 1928, 1941, 1954, 1973, 2014, 2017, 2020, 2065, 2108, 2115,
2135, 2153, 2191, 2198, 2210, 2219, 2241, 2274, 2278, 2283, 2292,
2314, 2328, 2340, 2341, 2357, 2387, 2399, 2477, 2485, 2487]),)}