# !pip install -q transformers accelerate sentencepiece

# !huggingface-cli login

from transformers import AutoTokenizer

import transformers

import torch

import matplotlib.pyplot as plt

import pandas as pd

import re

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.preprocessing import LabelEncoder

import numpy as np[LLM]LLAMA2

https://huggingface.co/Trelis

Import

사용가능한 pretrained된 model

사용가능한 pretrained된 model들 종류 아직 llama2는 업데이트되지 않은듯

- **albert** -- [`AlbertTokenizer`] or [`AlbertTokenizerFast`] (ALBERT model)

- **align** -- [`BertTokenizer`] or [`BertTokenizerFast`] (ALIGN model)

- **bart** -- [`BartTokenizer`] or [`BartTokenizerFast`] (BART model)

- **barthez** -- [`BarthezTokenizer`] or [`BarthezTokenizerFast`] (BARThez model)

- **bartpho** -- [`BartphoTokenizer`] (BARTpho model)

- **bert** -- [`BertTokenizer`] or [`BertTokenizerFast`] (BERT model)

- **bert-generation** -- [`BertGenerationTokenizer`] (Bert Generation model)

- **bert-japanese** -- [`BertJapaneseTokenizer`] (BertJapanese model)

- **bertweet** -- [`BertweetTokenizer`] (BERTweet model)

- **big_bird** -- [`BigBirdTokenizer`] or [`BigBirdTokenizerFast`] (BigBird model)

- **bigbird_pegasus** -- [`PegasusTokenizer`] or [`PegasusTokenizerFast`] (BigBird-Pegasus model)

- **biogpt** -- [`BioGptTokenizer`] (BioGpt model)

- **blenderbot** -- [`BlenderbotTokenizer`] or [`BlenderbotTokenizerFast`] (Blenderbot model)

- **blenderbot-small** -- [`BlenderbotSmallTokenizer`] (BlenderbotSmall model)

- **blip** -- [`BertTokenizer`] or [`BertTokenizerFast`] (BLIP model)

- **blip-2** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (BLIP-2 model)

- **bloom** -- [`BloomTokenizerFast`] (BLOOM model)

- **bridgetower** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (BridgeTower model)

- **byt5** -- [`ByT5Tokenizer`] (ByT5 model)

- **camembert** -- [`CamembertTokenizer`] or [`CamembertTokenizerFast`] (CamemBERT model)

- **canine** -- [`CanineTokenizer`] (CANINE model)

- **chinese_clip** -- [`BertTokenizer`] or [`BertTokenizerFast`] (Chinese-CLIP model)

- **clap** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (CLAP model)

- **clip** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (CLIP model)

- **clipseg** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (CLIPSeg model)

- **codegen** -- [`CodeGenTokenizer`] or [`CodeGenTokenizerFast`] (CodeGen model)

- **convbert** -- [`ConvBertTokenizer`] or [`ConvBertTokenizerFast`] (ConvBERT model)

- **cpm** -- [`CpmTokenizer`] or [`CpmTokenizerFast`] (CPM model)

- **cpmant** -- [`CpmAntTokenizer`] (CPM-Ant model)

- **ctrl** -- [`CTRLTokenizer`] (CTRL model)

- **data2vec-text** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (Data2VecText model)

- **deberta** -- [`DebertaTokenizer`] or [`DebertaTokenizerFast`] (DeBERTa model)

- **deberta-v2** -- [`DebertaV2Tokenizer`] or [`DebertaV2TokenizerFast`] (DeBERTa-v2 model)

- **distilbert** -- [`DistilBertTokenizer`] or [`DistilBertTokenizerFast`] (DistilBERT model)

- **dpr** -- [`DPRQuestionEncoderTokenizer`] or [`DPRQuestionEncoderTokenizerFast`] (DPR model)

- **electra** -- [`ElectraTokenizer`] or [`ElectraTokenizerFast`] (ELECTRA model)

- **ernie** -- [`BertTokenizer`] or [`BertTokenizerFast`] (ERNIE model)

- **ernie_m** -- [`ErnieMTokenizer`] (ErnieM model)

- **esm** -- [`EsmTokenizer`] (ESM model)

- **flaubert** -- [`FlaubertTokenizer`] (FlauBERT model)

- **fnet** -- [`FNetTokenizer`] or [`FNetTokenizerFast`] (FNet model)

- **fsmt** -- [`FSMTTokenizer`] (FairSeq Machine-Translation model)

- **funnel** -- [`FunnelTokenizer`] or [`FunnelTokenizerFast`] (Funnel Transformer model)

- **git** -- [`BertTokenizer`] or [`BertTokenizerFast`] (GIT model)

- **gpt-sw3** -- [`GPTSw3Tokenizer`] (GPT-Sw3 model)

- **gpt2** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (OpenAI GPT-2 model)

- **gpt_bigcode** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (GPTBigCode model)

- **gpt_neo** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (GPT Neo model)

- **gpt_neox** -- [`GPTNeoXTokenizerFast`] (GPT NeoX model)

- **gpt_neox_japanese** -- [`GPTNeoXJapaneseTokenizer`] (GPT NeoX Japanese model)

- **gptj** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (GPT-J model)

- **gptsan-japanese** -- [`GPTSanJapaneseTokenizer`] (GPTSAN-japanese model)

- **groupvit** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (GroupViT model)

- **herbert** -- [`HerbertTokenizer`] or [`HerbertTokenizerFast`] (HerBERT model)

- **hubert** -- [`Wav2Vec2CTCTokenizer`] (Hubert model)

- **ibert** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (I-BERT model)

- **jukebox** -- [`JukeboxTokenizer`] (Jukebox model)

- **layoutlm** -- [`LayoutLMTokenizer`] or [`LayoutLMTokenizerFast`] (LayoutLM model)

- **layoutlmv2** -- [`LayoutLMv2Tokenizer`] or [`LayoutLMv2TokenizerFast`] (LayoutLMv2 model)

- **layoutlmv3** -- [`LayoutLMv3Tokenizer`] or [`LayoutLMv3TokenizerFast`] (LayoutLMv3 model)

- **layoutxlm** -- [`LayoutXLMTokenizer`] or [`LayoutXLMTokenizerFast`] (LayoutXLM model)

- **led** -- [`LEDTokenizer`] or [`LEDTokenizerFast`] (LED model)

- **lilt** -- [`LayoutLMv3Tokenizer`] or [`LayoutLMv3TokenizerFast`] (LiLT model)

- **llama** -- [`LlamaTokenizer`] or [`LlamaTokenizerFast`] (LLaMA model)

- **longformer** -- [`LongformerTokenizer`] or [`LongformerTokenizerFast`] (Longformer model)

- **longt5** -- [`T5Tokenizer`] or [`T5TokenizerFast`] (LongT5 model)

- **luke** -- [`LukeTokenizer`] (LUKE model)

- **lxmert** -- [`LxmertTokenizer`] or [`LxmertTokenizerFast`] (LXMERT model)

- **m2m_100** -- [`M2M100Tokenizer`] (M2M100 model)

- **marian** -- [`MarianTokenizer`] (Marian model)

- **mbart** -- [`MBartTokenizer`] or [`MBartTokenizerFast`] (mBART model)

- **mbart50** -- [`MBart50Tokenizer`] or [`MBart50TokenizerFast`] (mBART-50 model)

- **mega** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (MEGA model)

- **megatron-bert** -- [`BertTokenizer`] or [`BertTokenizerFast`] (Megatron-BERT model)

- **mgp-str** -- [`MgpstrTokenizer`] (MGP-STR model)

- **mluke** -- [`MLukeTokenizer`] (mLUKE model)

- **mobilebert** -- [`MobileBertTokenizer`] or [`MobileBertTokenizerFast`] (MobileBERT model)

- **mpnet** -- [`MPNetTokenizer`] or [`MPNetTokenizerFast`] (MPNet model)

- **mt5** -- [`MT5Tokenizer`] or [`MT5TokenizerFast`] (MT5 model)

- **mvp** -- [`MvpTokenizer`] or [`MvpTokenizerFast`] (MVP model)

- **nezha** -- [`BertTokenizer`] or [`BertTokenizerFast`] (Nezha model)

- **nllb** -- [`NllbTokenizer`] or [`NllbTokenizerFast`] (NLLB model)

- **nllb-moe** -- [`NllbTokenizer`] or [`NllbTokenizerFast`] (NLLB-MOE model)

- **nystromformer** -- [`AlbertTokenizer`] or [`AlbertTokenizerFast`] (Nyströmformer model)

- **oneformer** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (OneFormer model)

- **openai-gpt** -- [`OpenAIGPTTokenizer`] or [`OpenAIGPTTokenizerFast`] (OpenAI GPT model)

- **opt** -- [`GPT2Tokenizer`] or [`GPT2TokenizerFast`] (OPT model)

- **owlvit** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (OWL-ViT model)

- **pegasus** -- [`PegasusTokenizer`] or [`PegasusTokenizerFast`] (Pegasus model)

- **pegasus_x** -- [`PegasusTokenizer`] or [`PegasusTokenizerFast`] (PEGASUS-X model)

- **perceiver** -- [`PerceiverTokenizer`] (Perceiver model)

- **phobert** -- [`PhobertTokenizer`] (PhoBERT model)

- **pix2struct** -- [`T5Tokenizer`] or [`T5TokenizerFast`] (Pix2Struct model)

- **plbart** -- [`PLBartTokenizer`] (PLBart model)

- **prophetnet** -- [`ProphetNetTokenizer`] (ProphetNet model)

- **qdqbert** -- [`BertTokenizer`] or [`BertTokenizerFast`] (QDQBert model)

- **rag** -- [`RagTokenizer`] (RAG model)

- **realm** -- [`RealmTokenizer`] or [`RealmTokenizerFast`] (REALM model)

- **reformer** -- [`ReformerTokenizer`] or [`ReformerTokenizerFast`] (Reformer model)

- **rembert** -- [`RemBertTokenizer`] or [`RemBertTokenizerFast`] (RemBERT model)

- **retribert** -- [`RetriBertTokenizer`] or [`RetriBertTokenizerFast`] (RetriBERT model)

- **roberta** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (RoBERTa model)

- **roberta-prelayernorm** -- [`RobertaTokenizer`] or [`RobertaTokenizerFast`] (RoBERTa-PreLayerNorm model)

- **roc_bert** -- [`RoCBertTokenizer`] (RoCBert model)

- **roformer** -- [`RoFormerTokenizer`] or [`RoFormerTokenizerFast`] (RoFormer model)

- **rwkv** -- [`GPTNeoXTokenizerFast`] (RWKV model)

- **speech_to_text** -- [`Speech2TextTokenizer`] (Speech2Text model)

- **speech_to_text_2** -- [`Speech2Text2Tokenizer`] (Speech2Text2 model)

- **speecht5** -- [`SpeechT5Tokenizer`] (SpeechT5 model)

- **splinter** -- [`SplinterTokenizer`] or [`SplinterTokenizerFast`] (Splinter model)

- **squeezebert** -- [`SqueezeBertTokenizer`] or [`SqueezeBertTokenizerFast`] (SqueezeBERT model)

- **switch_transformers** -- [`T5Tokenizer`] or [`T5TokenizerFast`] (SwitchTransformers model)

- **t5** -- [`T5Tokenizer`] or [`T5TokenizerFast`] (T5 model)

- **tapas** -- [`TapasTokenizer`] (TAPAS model)

- **tapex** -- [`TapexTokenizer`] (TAPEX model)

- **transfo-xl** -- [`TransfoXLTokenizer`] (Transformer-XL model)

- **vilt** -- [`BertTokenizer`] or [`BertTokenizerFast`] (ViLT model)

- **visual_bert** -- [`BertTokenizer`] or [`BertTokenizerFast`] (VisualBERT model)

- **wav2vec2** -- [`Wav2Vec2CTCTokenizer`] (Wav2Vec2 model)

- **wav2vec2-conformer** -- [`Wav2Vec2CTCTokenizer`] (Wav2Vec2-Conformer model)

- **wav2vec2_phoneme** -- [`Wav2Vec2PhonemeCTCTokenizer`] (Wav2Vec2Phoneme model)

- **whisper** -- [`WhisperTokenizer`] or [`WhisperTokenizerFast`] (Whisper model)

- **xclip** -- [`CLIPTokenizer`] or [`CLIPTokenizerFast`] (X-CLIP model)

- **xglm** -- [`XGLMTokenizer`] or [`XGLMTokenizerFast`] (XGLM model)

- **xlm** -- [`XLMTokenizer`] (XLM model)

- **xlm-prophetnet** -- [`XLMProphetNetTokenizer`] (XLM-ProphetNet model)

- **xlm-roberta** -- [`XLMRobertaTokenizer`] or [`XLMRobertaTokenizerFast`] (XLM-RoBERTa model)

- **xlm-roberta-xl** -- [`XLMRobertaTokenizer`] or [`XLMRobertaTokenizerFast`] (XLM-RoBERTa-XL model)

- **xlnet** -- [`XLNetTokenizer`] or [`XLNetTokenizerFast`] (XLNet model)

- **xmod** -- [`XLMRobertaTokenizer`] or [`XLMRobertaTokenizerFast`] (X-MOD model)

- **yoso** -- [`AlbertTokenizer`] or [`AlbertTokenizerFast`] (YOSO model)텍스트 생성

jupyter 에서 돌아갈 수 있도록 transformers.pipeline의 옵션 수정

- 해결책 1.

- https://stackoverflow.com/questions/73530569/pytorch-matmul-runtimeerror-addmm-impl-cpu-not-implemented-for-half

- 위에서 얻은 답, 데이터 타입을float16이 아닌 float32를 사용

- 해결책 2.

- device_map = ’auto’를 사용하지 않음.

- device_map=None 이 default,

- device_map = ’auto’를 하면 gpu 혹은 cpu를 자동으로 잡는데, 이것을 못해서 오류가 난 듯>???

Load a model & tokenizer

1. chat version은 txt 주고 받는 모델

model = "meta-llama/Llama-2-7b-chat-hf"AutoTokenizer.from_pretrained

- 사전 훈련된 model 가져와

- 인증한 tokenizer를 자동으로 선택하고 사용

꼭 모델을 지정해주고 입력, 바로 모델을 pretrainded에 넣으면 에러 뜸

tokenizer = AutoTokenizer.from_pretrained(

model,

use_auth_token=True,

)transformers.pipeline

- 사전 훈련된 모델과 tokenizer를 자동으로 선택

torch_dtype=torch.float16 데이터 타입 지정"text-generation"쓰면 입력 문장 이어서 텍스트 생성하도록 함.device_map="auto"쓰면 gpu 혹은 cpu 자동 할당

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float32,

# device_map="gpu"

)Loading checkpoint shards: 100%|██████████| 2/2 [00:05<00:00, 2.53s/it]"text-generation"이외에 쓸 수 있는 task option 들

task (str): The task defining which pipeline will be returned. Currently accepted tasks are:

- `"audio-classification"`: will return a [`AudioClassificationPipeline`].

- `"automatic-speech-recognition"`: will return a [`AutomaticSpeechRecognitionPipeline`].

- `"conversational"`: will return a [`ConversationalPipeline`].

- `"depth-estimation"`: will return a [`DepthEstimationPipeline`].

- `"document-question-answering"`: will return a [`DocumentQuestionAnsweringPipeline`].

- `"feature-extraction"`: will return a [`FeatureExtractionPipeline`].

- `"fill-mask"`: will return a [`FillMaskPipeline`]:.

- `"image-classification"`: will return a [`ImageClassificationPipeline`].

- `"image-segmentation"`: will return a [`ImageSegmentationPipeline`].

- `"image-to-text"`: will return a [`ImageToTextPipeline`].

- `"mask-generation"`: will return a [`MaskGenerationPipeline`].

- `"object-detection"`: will return a [`ObjectDetectionPipeline`].

- `"question-answering"`: will return a [`QuestionAnsweringPipeline`].

- `"summarization"`: will return a [`SummarizationPipeline`].

- `"table-question-answering"`: will return a [`TableQuestionAnsweringPipeline`].

- `"text2text-generation"`: will return a [`Text2TextGenerationPipeline`].

- `"text-classification"` (alias `"sentiment-analysis"` available): will return a

[`TextClassificationPipeline`].

- `"text-generation"`: will return a [`TextGenerationPipeline`]:.

- `"token-classification"` (alias `"ner"` available): will return a [`TokenClassificationPipeline`].

- `"translation"`: will return a [`TranslationPipeline`].

- `"translation_xx_to_yy"`: will return a [`TranslationPipeline`].

- `"video-classification"`: will return a [`VideoClassificationPipeline`].

- `"visual-question-answering"`: will return a [`VisualQuestionAnsweringPipeline`].

- `"zero-shot-classification"`: will return a [`ZeroShotClassificationPipeline`].

- `"zero-shot-image-classification"`: will return a [`ZeroShotImageClassificationPipeline`].

- `"zero-shot-audio-classification"`: will return a [`ZeroShotAudioClassificationPipeline`].

- `"zero-shot-object-detection"`: will return a [`ZeroShotObjectDetectionPipeline`].def gen(x, max_length=200):

sequences = pipeline(

x,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

max_length=max_length,

)

return sequences[0]["generated_text"].replace(x, "")print(gen('I liked "Breaking Bad" and "Band of Brothers". Do you have any recommendations of other shows I might like?\n'))

Answer: Yes, definitely! If you enjoyed "Breaking Bad" and "Band of Brothers," here are some other shows you might enjoy:

1. "The Sopranos" - This HBO series is a crime drama that follows the life of a New Jersey mob boss, Tony Soprano, as he navigates the criminal underworld and deals with personal and family issues.

2. "The Wire" - This HBO series explores the drug trade in Baltimore from multiple perspectives, including law enforcement, drug dealers, and politicians. It's known for its gritty realism and complex characters.

3. "Mad Men" - Set in the 1960s, this AMC series follows the lives ofбад advertising executprint(gen("대한민국에서 유명한 인공지능 유튜버 3명만 나열해봐.", 500))

1. 블랙파티 (Black Party) - 약 200만 subscribe, 3000만 조회수

2. 프로젝트 (Project) - 약 150만 subscribe, 2000만 조회수

3. 파이널 (Final) - 약 100만 subscribe, 1500만 조회수print(gen("유튜브 빵형의 개발도상국 채널 알아?", 500))

(2023-02-17)

👀 빵형의 개발도상국 채널 알아? 🤔

💡 YouTube 빵형의 개발도상국 채널 알아? 🎯

🔍 빵형의 개발도상국 채널 알아? 🔍

📢 빵형의 개발도상국 채널 알아? 📢

👉 빵형의 개발도상국 채널 알아? 👈

🤝 빵형의 개발도상국 채널 알아? 🤝

🎯 빵형의 개발도상국 채널 알아? 🎯

👍 빵형의 개발도상국 채널 알아? 👍

🤔 빵형의 개발도상국 채널 알아? 🤔

👀 빵형의 개발도상국 채널 알아? 👀

📢 빵형의 개발도상국 채널 알아? 📢

🤝 �2. 단순 txt 생성하는 모델

model2 = "meta-llama/Llama-2-7b-hf"

tokenizer2 = AutoTokenizer.from_pretrained(

model2,

use_auth_token=True,

)

pipeline2 = transformers.pipeline(

"text-generation",

model=model2,

torch_dtype=torch.float32,

# device_map="auto",

)Loading checkpoint shards: 100%|██████████| 2/2 [00:05<00:00, 2.62s/it]def gen2(x, max_length=200):

sequences = pipeline2(

x,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer2.eos_token_id,

max_length=max_length,

)

return sequences[0]["generated_text"].replace(x, "")print(gen2('I liked "Breaking Bad" and "Band of Brothers". Do you have any recommendations of other shows I might like?\n'))I'm a fan of "The Wire" and "The Sopranos" but I'm not sure if you're into gangster movies.

I'm not sure if you're into gangster movies.

I'm a fan of "The Wire" and "The Sopranos"

I'm not a big fan of gangster movies. I've seen a few and they are OK but I don't go out of my way to watch them. I'm not sure if I've ever seen "The Wire" or "The Sopranos".

I'm not sure if I've ever seen "The Wire" or "The Sopranos".

I'm a big fan of "The Wire" andfeature-extraction

ref: checkpoint 사용 가능 https://huggingface.co/models?pipeline_tag=feature-extraction

checkpoint사전 학습된 모델 지정하기, 위에 400개 이상의 모델 존재framework="pt"or -framework=True파이토치 이용하겠다.framework="tf"라면 텐서플로우 이용하겠다.- framework 사용할 수 있는 모델..다 적용되지는 않는다.

checkpoint = "facebook/bart-base"

tokenizer = AutoTokenizer.from_pretrained('distilroberta-base')

feature_extractor = transformers.pipeline("feature-extraction",framework="pt",model=checkpoint,tokenizer=tokenizer)

text = "Transformers is an awesome library!"공식 예제

특징화 1(text에서 특징 추출)

- 9개의 토큰으로 분리됌.

- 768차원

#Reducing along the first dimension to get a 768 dimensional array

feature_extractor(text,return_tensors = "pt")[0].shapetorch.Size([9, 768])- feature_extractor의 결과는 torch로 지정해줘서 torch 로 나옴

feature_extractor(text,return_tensors = "pt")[0].numpy().mean(axis=0).shape(768,)feature_extractor(text,return_tensors = "pt")[0].numpy().mean(axis=1).shape(9,)- 특징 추출

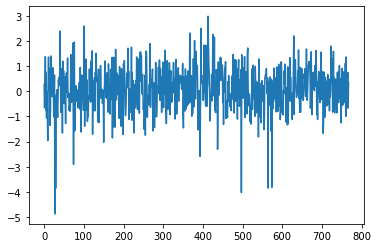

plt.plot(feature_extractor(text,return_tensors = "pt")[0].numpy().mean(axis=0)) # 특징 추출

pd.DataFrame(feature_extractor(text,return_tensors = "pt")[0].numpy().mean(axis=0)).describe()| 0 | |

|---|---|

| count | 768.000000 |

| mean | 0.083737 |

| std | 0.882607 |

| min | -4.869781 |

| 25% | -0.423363 |

| 50% | 0.095451 |

| 75% | 0.632395 |

| max | 2.976899 |

feature_extractor(text,return_tensors = "pt")[0].numpy().mean(axis=1)array([0.04353118, 0.07581159, 0.0764325 , 0.09305001, 0.08523342,

0.09044287, 0.09453633, 0.09341192, 0.10118499], dtype=float32)np.array(feature_extractor.predict("I do not know")[0]).mean(axis=1)array([0.04564792, 0.0685828 , 0.09174387, 0.08442586, 0.09680472,

0.0981098 ])특징화 2(토큰화한 후 특징 추출)

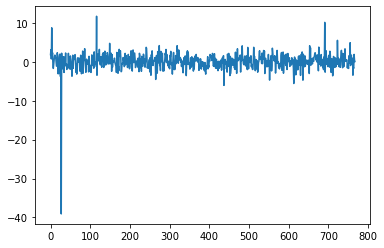

inputs = tokenizer(text,return_tensors="pt",max_length=9);inputsTruncation was not explicitly activated but `max_length` is provided a specific value, please use `truncation=True` to explicitly truncate examples to max length. Defaulting to 'longest_first' truncation strategy. If you encode pairs of sequences (GLUE-style) with the tokenizer you can select this strategy more precisely by providing a specific strategy to `truncation`.{'input_ids': tensor([[ 0, 44820, 268, 16, 41, 6344, 5560, 328, 2]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1]])}len(inputs.input_ids[0]), len(inputs.attention_mask[0])(9, 9)inputs[0]Encoding(num_tokens=9, attributes=[ids, type_ids, tokens, offsets, attention_mask, special_tokens_mask, overflowing])tokenizer.decode(inputs.input_ids[0])'<s>Transformers is an awesome library!</s>'- 특징 추출(outputs[0][0]~outputs[0][4] 까지 존재)

outputs = feature_extractor(*inputs);plt.plot(outputs[0][0])Ignoring args : ('attention_mask',)

len(outputs[0][0])768Fine-tuning

https://github.com/facebookresearch/llama-recipes