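import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
from torch_geometric_temporal.nn.recurrent import GConvLSTM
from torch_geometric_temporal.signal import StaticGraphTemporalSignal
import eptstgcn


class Loader(object):
    """Builds a StaticGraphTemporalSignal from a dict with "edges", "weights", "FX" and "node_ids"."""

    def __init__(self, data_dict):
        self._dataset = data_dict

    def _get_edges(self):
        # Edge list, transposed for use as the edge index.
        self._edges = np.array(self._dataset["edges"]).T

    def _get_edge_weights(self):
        # self._edge_weights = np.array(self._dataset["weights"]).T
        edge_weights = np.array(self._dataset["weights"]).T
        #scaled_edge_weights = minmaxscaler(edge_weights)
        self._edge_weights = edge_weights

    def _get_targets_and_features(self):
        # stacked_target has shape (time steps, nodes).
        stacked_target = np.stack(self._dataset["FX"])
        # features[i]: the `lags` steps starting at i, shape (nodes, lags).
        self.features = np.stack([
            stacked_target[i : i + self.lags, :].T
            for i in range(stacked_target.shape[0] - self.lags)
        ])
        # targets[i]: the step right after that window, shape (nodes,).
        self.targets = np.stack([
            stacked_target[i + self.lags, :].T
            for i in range(stacked_target.shape[0] - self.lags)
        ])

    def get_dataset(self, lags: int = 4) -> StaticGraphTemporalSignal:
        self.lags = lags
        self._get_edges()
        self._get_edge_weights()
        self._get_targets_and_features()
        dataset = StaticGraphTemporalSignal(
            self._edges, self._edge_weights, self.features, self.targets
        )
        dataset.node_ids = self._dataset['node_ids']
        return dataset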
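The windowing in `_get_targets_and_features` can be easier to follow on a toy series. The sketch below is a dependency-free illustration, not part of `Loader`: it uses nested lists instead of NumPy arrays, and the helper name `make_lag_windows` is hypothetical. For a series with `T` time steps and `N` nodes it yields `T - lags` samples, each pairing an `N x lags` feature block with the `N` values of the following step.

```python
def make_lag_windows(series, lags):
    """Split a (T, N) nested list into lagged features and next-step targets.

    Mirrors the slicing in Loader._get_targets_and_features:
    features[i] holds rows i .. i+lags-1 transposed to (N, lags),
    targets[i] holds row i+lags.
    """
    num_steps = len(series)
    features, targets = [], []
    for i in range(num_steps - lags):
        window = series[i:i + lags]  # `lags` rows, N columns
        # Transpose the window so each node gets its own row of lagged values.
        features.append([list(col) for col in zip(*window)])
        # The target is the step right after the window.
        targets.append(list(series[i + lags]))
    return features, targets


# Toy data: 5 time steps, 2 nodes.
toy = [[1, 10], [2, 20], [3, 30], [4, 40], [5, 50]]
features, targets = make_lag_windows(toy, lags=2)
print(len(features))  # 3
print(features[0])    # [[1, 2], [10, 20]]
print(targets[0])     # [3, 30]
```

With `lags=2` the first sample uses steps 0 and 1 as input and step 2 as the target, which is why the first `lags` time steps never appear as targets.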

class RecurrentGCN(torch.nn.Module):
    def __init__(self, node_features, filters):
        super(RecurrentGCN, self).__init__()
        self.recurrent = GConvLSTM(in_channels=node_features, out_channels=filters, K=2)
        self.linear = torch.nn.Linear(filters, 1)

    def forward(self, x, edge_index, edge_weight, h, c):
        h_0, c_0 = self.recurrent(x, edge_index, edge_weight, h, c)
        h = F.relu(h_0)
        h = self.linear(h)
        return h, h_0, c_0

# device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

device = torch.device('cpu')

class RGCN_Learner:
    def __init__(self):
        self.method = 'RecurrentGCN'
        self.figs = []
        self.epochs = 0
        self.losses = []
        self._node_idx = 0
        # load() falls back to defaults when these are still None.
        self.lags = None
        self.train_ratio = None

    def load(self, y):
        if (self.lags is None) or (self.train_ratio is None):
            self.lags = 4
            self.train_ratio = 0.8
        self.t, self.n = y.shape
        dct = makedict(FX=y.tolist())
        self.loader = Loader(dct)
        self.dataset = self.loader.get_dataset(lags=self.lags)
        self.X = torch.tensor(self.dataset.features).float()
        self.y = torch.tensor(self.dataset.targets).float()
        self.train_dataset, self.test_dataset = eptstgcn.utils.temporal_signal_split(
            self.dataset, train_ratio=self.train_ratio
        )
        self.len_test = self.test_dataset.snapshot_count
        self.len_tr = self.train_dataset.snapshot_count
        #self.dataset_name = str(self.train_dataset) if dataset_name is None else dataset_name

    def get_batches(self, batch_size=256):
        # Ceiling division: one extra batch when there is a remainder.
        num_batches = self.len_tr // batch_size + (1 if self.len_tr % batch_size != 0 else 0)
        self.batches = []
        for i in range(num_batches):
            start_idx = i * batch_size
            end_idx = start_idx + batch_size
            self.batches.append(self.train_dataset[start_idx:end_idx])

    def learn(self, epoch=1):
        # self.model and self.optimizer are not created in this class;
        # assign them before calling learn().
        self.model.train()
        for e in range(epoch):
            losses_batch = []
            for b, batch in enumerate(self.batches):
                loss = 0
                # Reset the recurrent state at the start of every batch.
                self.h, self.c = None, None
                for t, snapshot in enumerate(batch):
                    snapshot = snapshot.to(device)
                    yt_hat, self.h, self.c = self.model(snapshot.x, snapshot.edge_index, snapshot.edge_attr, self.h, self.c)
                    loss = loss + torch.mean((yt_hat.reshape(-1)-snapshot.y.reshape(-1))**2)
                    print(f'\rbatch={b}\t t={t+1}\t loss={loss/(t+1)}\t', end='', flush=True)
                # Mean squared error averaged over the snapshots in the batch.
                loss = loss / (t+1)
                loss.backward()
                self.optimizer.step()
                self.optimizer.zero_grad()
                losses_batch.append(loss.item())
            self.epochs = self.epochs + 1
            print(f'\repoch={self.epochs}\t loss={np.mean(losses_batch)}\n', end='', flush=True)
            self.losses.append(np.mean(losses_batch))
        self._savefigs()

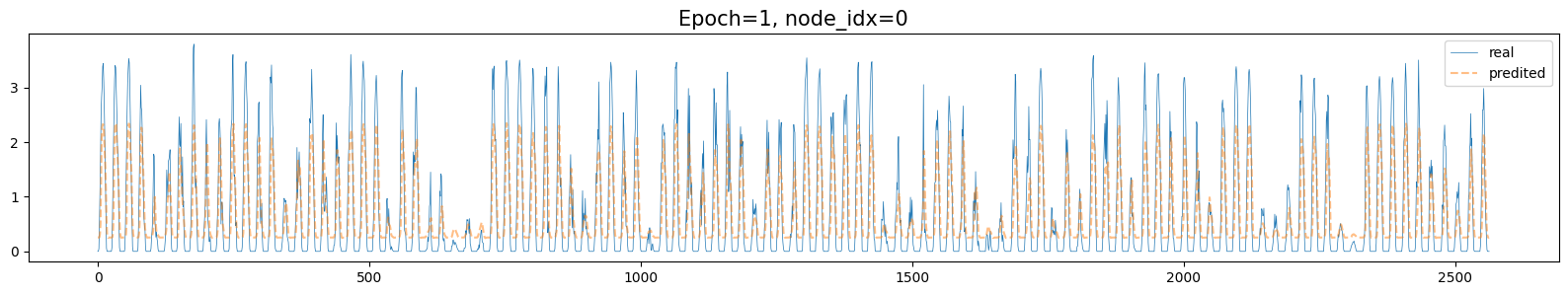

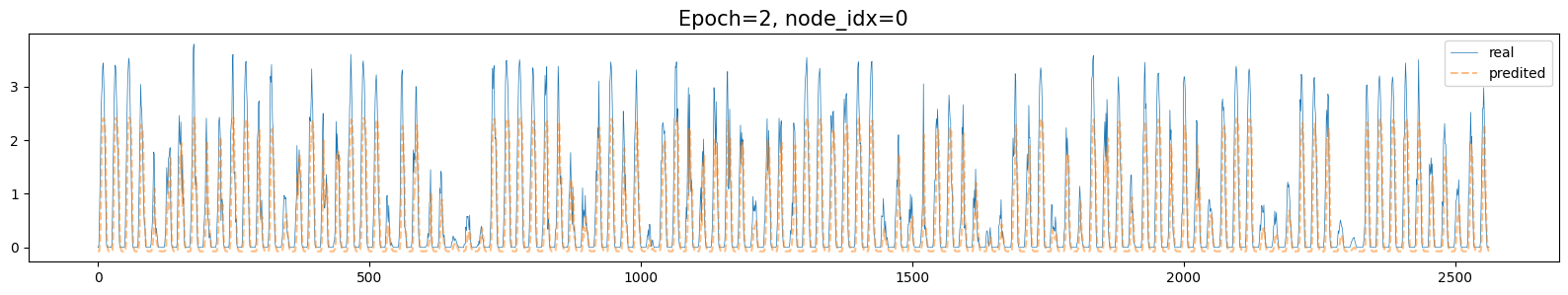

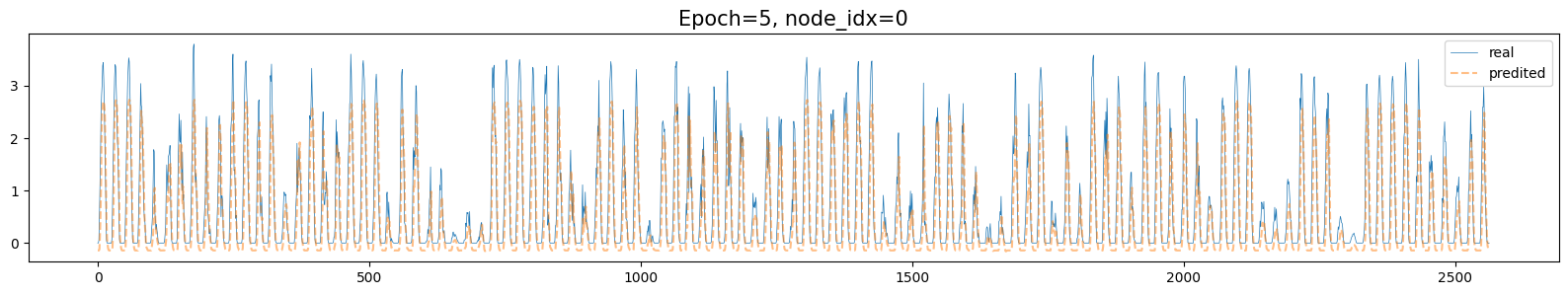

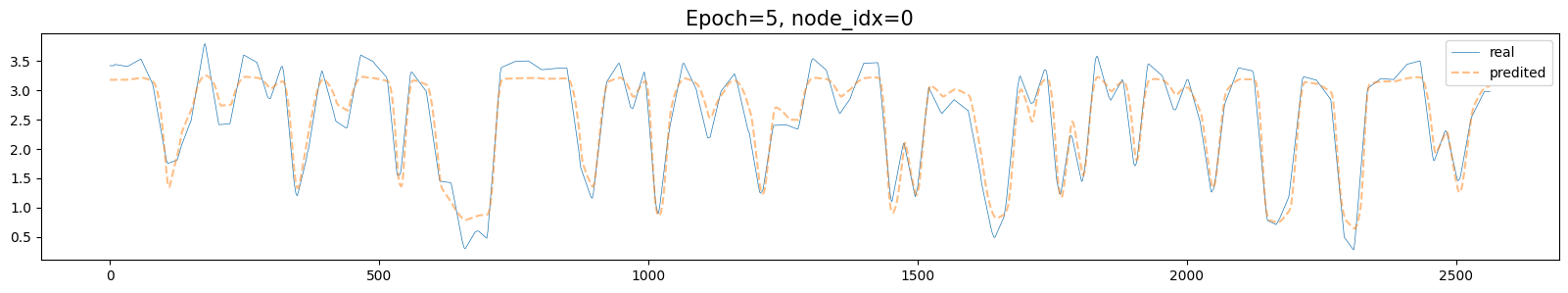

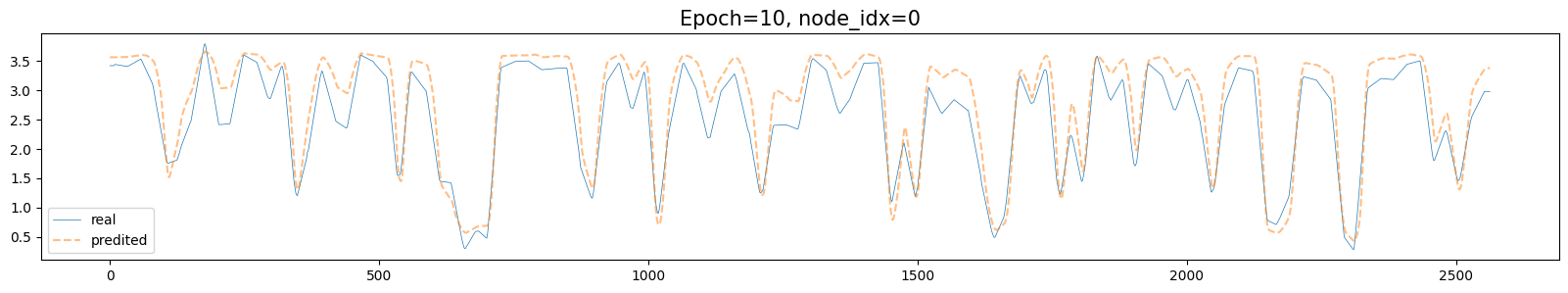

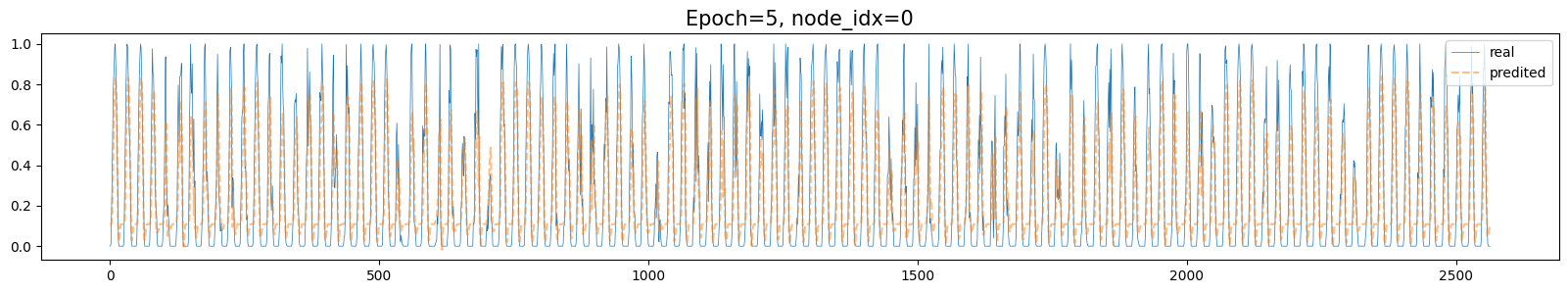

    def _savefigs(self):
        # Refresh self.yhat with the current model before plotting.
        self.__call__()
        with plt.style.context('default'):
            plt.ioff()
            plt.rcParams['figure.figsize'] = [20, 3]  # [width, height]
            fig, ax = plt.subplots()
            ax.plot(self.y[:, self._node_idx], label='real', lw=0.5)
            ax.plot(self.yhat[:, self._node_idx], '--', label='predicted', alpha=0.5)
            ax.set_title(f'Epoch={self.epochs}, node_idx={self._node_idx}', size=15)
            ax.legend()
            #mplcyberpunk.add_glow_effects()
            self.figs.append(fig)
            plt.close()

    def __call__(self, dataset=None):
        if dataset is None:
            dataset = self.dataset
        self.yhat = torch.stack([
            self.model(snapshot.x, snapshot.edge_index, snapshot.edge_attr, self.h, self.c)[0]
            for snapshot in dataset
        ]).detach().squeeze().float()
        return {'X': self.X, 'y': self.y, 'yhat': self.yhat}
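The batch boundaries computed in `get_batches` follow a ceiling division over the training snapshots. The sketch below reproduces only that index arithmetic on plain integers. `batch_bounds` is a hypothetical helper, and clipping `end` to the dataset length is made explicit here, whereas the original relies on slicing to handle the shorter last batch.

```python
def batch_bounds(num_items, batch_size=256):
    """Return (start, end) index pairs that cover range(num_items) in order."""
    # Ceiling division: one extra batch when there is a remainder.
    num_batches = num_items // batch_size + (1 if num_items % batch_size != 0 else 0)
    bounds = []
    for i in range(num_batches):
        start = i * batch_size
        end = min(start + batch_size, num_items)  # the last batch may be shorter
        bounds.append((start, end))
    return bounds


print(batch_bounds(600, batch_size=256))  # [(0, 256), (256, 512), (512, 600)]
print(batch_bounds(512, batch_size=256))  # [(0, 256), (256, 512)]
```

Because the recurrent state is reset at the start of each batch in `learn`, the batch size also sets how many consecutive snapshots share one hidden state.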

# learn
# def rgcn(FX,train_ratio,lags,filters,epoch):
#     dct = makedict(FX=FX.tolist())
#     loader = Loader(dct)
#     dataset = loader.get_dataset(lags=lags)
#     dataset_tr, dataset_test = eptstgcn.utils.temporal_signal_split(dataset, train_ratio = train_ratio)
#     lrnr = RGCN_Learner(dataset_tr, dataset_name = 'org & arbitrary')
#     lrnr.learn(filters=filters, epoch=epoch)
#     yhat = np.array(lrnr(dataset)['yhat'])
#     yhat = np.concatenate([np.array([list(yhat[0])]*lags),yhat],axis=0)
#     return yhat